Enseñar tecnología en comunidad

Cómo crear y dar lecciones que funcionen

y construir una comunidad docente a su alrededor

Taylor & Francis, 2019, 978-0-367-35328-5

Dedication

To my mother, Doris Wilson,

who taught hundreds of children to read and to believe in themselves.

“Remember, you still have a lot of good times in front of you.”

The Spanish translation of this book is dedicated to the memory of Rebeca Cherep de Guber.

All royalties from sales of this book (Spanish edition) are donated to

MetaDocencia, a volunteer-run organization

that teaches Spanish-speaking educators around the world

to teach effectively using evidence-based practices.

The rules

Be kind: all else is details.

Remember that you are not your learners…

…that most people would rather fail than change…

…and that 90% of magic consists of knowing one more thing (than your audience).

Never teach alone.

Never hesitate to sacrifice truth for clarity.

Make every mistake a lesson.

Remember that no lesson survives first contact with learners…

…that every lesson is too short for the teacher and too long for the learner…

…and that nobody will be more excited about your lesson than you.

Laura Acion, Yanina Bellini Saibene and Yara Terrazas-Carafa

About the translation

This is the website of the Spanish version of Teaching Tech Together by Greg Wilson. The translation, Enseñar Tecnología en Comunidad, is a collaborative project by volunteers from the MetaDocencia and R-Ladies communities in Latin America, whose goal is to translate up-to-date, high-quality material into Spanish to make it accessible to Spanish speakers. We began the translation in March 2020 and completed it in March 2021.

The work was organized so that each chapter had one person in charge of translating it and two people who reviewed it. We made sure that translators and reviewers came from different countries so that we could take into account the many different, and beautiful, ways in which Spanish is spoken around the world. At the end of the process, the book as a whole received a final edit.

The decisions we made during the translation were based on the team's previous experience and on other guides for collaborative translations into Spanish, such as those of R para Ciencia de Datos and The Carpentries:

The dialectal variety of Spanish (Castilian) used in the translation is that of Latin America, and we used a conversational voice rather than a formal or academic one.

We decided to try to adjust the wording to avoid grammatical gender marking. Where that was not possible, we used non-sexist language, which means using both feminine and masculine forms while prioritizing a text that reads smoothly and conveys its message clearly. For consistency, and to show that there is no hierarchy between them, we alternated the order feminine/masculine and masculine/feminine between chapters, keeping the same order throughout each chapter.

We also decided to look for Spanish versions of references such as Wikipedia entries and lessons from The Carpentries. Where none existed, we kept the English versions.

Finally, we decided to change some examples to more regional situations, so that they would feel closer to the home region of most of the translators.

The people who worked on this project are (in alphabetical order): Laura Acion, Mónica Alonso, Zulemma Bazurto, Alejandra Bellini, Yanina Bellini Saibene, Juliana Benitez Saldivar, Lupe Canaviri Maydana, Silvia Canelón, Ruth Chirinos, Paola Corrales, María Dermit, Ana Laura Diedrich, Patricia Loto, Priscilla Minotti, Natalia Morandeira, Lucía Rodríguez Planes, Paloma Rojas, Yuriko Sosa, Natalie Stroud, Yara Terrazas-Carafa and Roxana Noelía Villafañe.

Each chapter lists the people who translated and reviewed it.

The work was coordinated by Yanina Bellini Saibene, and the final edit was done by Yanina Bellini Saibene and Natalia Morandeira.

Malena Zabalegui advised us on the use of non-sexist, inclusive language for this translation, and Francisco Etchart designed the hex sticker.

We also created a bilingual glossary and dictionary of education and technology terms, based on the book's glossary and on the list of the book's terms to translate (or not). Yanina Bellini Saibene developed this glossary using glosario.

All the details of the translation process can be found in the project documentation.

How to cite this work

You can cite this work as follows:

Wilson, G. (2021). Enseñar tecnología en comunidad. Cómo crear y dar lecciones que funcionen y construir una comunidad docente a su alrededor [Teaching Tech Together. How to create and deliver lessons that work and build a teaching community around them] (Y. Bellini Saibene, N. S. Morandeira, P. Corrales, L. Acion, M. Dermit, Y. Sosa, J. Benitez Saldivar, Z. Bazurto, S. Canelón, L. Canaviri Maydana, M. Alonso, A. Bellini, P. Minotti, R. Chirinos, P. Rojas, N. Stroud, R. N. Villafañe, P. Loto, A. L. Diedrich, Y. Terrazas-Carafa & L. Rodríguez Planes, Trans.). https://teachtogether.tech/es/ (Original work published 2019)

This citation style is based on the “Book, republished in translation” section of APA's guide to citing translated works. We include the English title following this APA suggestion (see the example for Piaget (1950)).

Introduction

Natalia Morandeira, Yanina Bellini Saibene and Zulemma Bazurto

All over the world, communities of practice have sprung up to teach programming, web design, robotics, and other skills to free-range learners.¹ These groups exist so that people don't have to learn these things on their own, but, ironically, their founders and teachers are often teaching themselves how to teach.

There is a better way. Just as knowing a few basic things about germs and nutrition can help you stay healthy, knowing a few things about cognitive psychology, instructional design, inclusivity, and community organization can help you become a more effective teacher. This book presents key ideas you can use right away, explains why we believe they are true, and points you to other resources that will help you go further.

Reuse

Some parts of this book were originally created for Software Carpentry's instructor training program. Any part of the book may be freely distributed and reused under the Creative Commons Attribution-NonCommercial 4.0 license (Appendix 16). You may use the online version at http://teachtogether.tech/es/ in any class (free or paid), and you may quote short excerpts under fair use, but you may not republish large excerpts in commercial works without prior permission.

Contributions, corrections, and suggestions are welcome, and contributors will be thanked each time a new version of the book is published. Please see Appendix 18 for details on how to contribute and Appendix 17 for our code of conduct.

Who you are

Section 6.1 explains how to figure out who your learners are. The four kinds of people this book is written for are all end-user teachers: teaching isn't their primary occupation, they have little or no background in pedagogy, and they may work outside institutional classrooms.

- Emilia

trained as a librarian and now works as a web designer and project manager at a small consulting company. In her spare time, she helps run design classes for women who are moving into tech as a second career. She is recruiting colleagues to run more classes in her area, and wants to know how to make lessons that other people can use while also growing a volunteer teaching organization.

- David

is a professional programmer whose two teenage children attend a school that doesn't offer programming classes. He has volunteered to run a monthly after-school programming club. Although he frequently gives presentations to his colleagues, he has no classroom teaching experience. He wants to learn how to build effective lessons in a reasonable amount of time, and would like to learn more about the pros and cons of self-paced online classes.

- Samira

is a robotics student who is considering becoming a teacher after she graduates. She wants to help her peers at weekend robotics workshops, but she has never taught a class before and feels a strong case of impostor syndrome. She wants to learn more about education in general to decide whether teaching is for her, and is also looking for specific tips that will help her deliver lessons more effectively.

- René

teaches computer science at a university. They have been teaching undergraduate courses on operating systems for six years and are increasingly convinced that there has to be a better way to teach. The only training available through their university's teaching and learning center is on posting assignments and submitting grades in the online learning management system, so they want to find out what other training they could ask for.

These people have a variety of technical backgrounds and some previous teaching experience, but lack formal training in teaching, lesson design, or community organizing. Most of them work with free-range learners and focus on teenagers and adults rather than children; all of them have limited time and resources. We expect our quartet to use this material as follows:

- Emilia

will take part in a weekly online reading group with her volunteers.

- David

will tackle part of this book in a one-day weekend workshop and study the rest on his own.

- Samira

will use this book in a one-semester undergraduate course that includes assignments, a project, and a final exam.

- René

will read the book on their own in their office or while commuting on public transit, wishing all the while that universities did more to support high-quality teaching.

What else to read

If you are in a hurry, or want a taste of what this book will cover, [Brow2018] presents ten evidence-based tips for teaching computing.² You may also enjoy:³

The Carpentries instructor training, on which this book is based.

[Lang2016] and [Hust2012], which are short and approachable, and which connect things you can do right away with the research behind them.

[Berg2012,Lemo2014,Majo2015,Broo2016,Rice2018,Wein2018b], which are full of practical tips for things you can do in your classroom, but which may make more sense once you have a framework for understanding why their ideas work.

[DeBr2015], which explains what is true about education by explaining what isn't, and [Dida2016], which grounds learning theory in cognitive psychology.

[Pape1993], which remains an inspiring vision of how computers could change education. Amy Ko's excellent description is a better summary of Papert's ideas than I could write, and [Craw2010] is a thought-provoking companion to both.

[Gree2014,McMi2017,Watt2014], which explain why so many attempts at educational reform have failed over the past forty years, how for-profit colleges have exploited and exacerbated inequality in our society, and how technology has repeatedly failed to revolutionize education.

[Brow2007] and [Mann2015], because you can't teach well without changing the system you teach in, and you can't bring about that change on your own.

Readers who want more academic material may find [Guzd2015a,Hazz2014,Sent2018,Finc2019,Hpl2018] rewarding, while Mark Guzdial's blog has consistently been informative, thought-provoking, and motivating.

Acknowledgments

This book would not exist without the contributions of Laura Acion, Jorge Aranda, Mara Averick, Erin Becker, Yanina Bellini Saibene, Azalee Bostroem, Hugo Bowne-Anderson, Neil Brown, Gerard Capes, Francis Castro, Daniel Chen, Dav Clark, Warren Code, Ben Cotton, Richie Cotton, Karen Cranston, Katie Cunningham, Natasha Danas, Matt Davis, Neal Davis, Mark Degani, Tim Dennis, Paul Denny, Michael Deutsch, Brian Dillingham, Grae Drake, Kathi Fisler, Denae Ford, Auriel Fournier, Bob Freeman, Nathan Garrett, Mark Guzdial, Rayna Harris, Ahmed Hasan, Ian Hawke, Felienne Hermans, Kate Hertweck, Toby Hodges, Roel Hogervorst, Mike Hoye, Dan Katz, Christina Koch, Shriram Krishnamurthi, Katrin Leinweber, Colleen Lewis, Dave Loyall, Paweł Marczewski, Lenny Markus, Sue McClatchy, Jessica McKellar, Ian Milligan, Ernesto Mirt, Julie Moronuki, Lex Nederbragt, Aleksandra Nenadic, Jeramia Ory, Joel Ostblom, Nicolás Palopoli, Elizabeth Patitsas, Aleksandra Pawlik, Sorawee Porncharoenwase, Emily Porta, Alex Pounds, Thomas Price, Danielle Quinn, Ian Ragsdale, Erin Robinson, Rosario Robinson, Ariel Rokem, Pat Schloss, Malvika Sharan, Florian Shkurti, Dan Sholler, Juha Sorva, Igor Steinmacher, Tracy Teal, Tiffany Timbers, Richard Tomsett, Preston Tunnell Wilson, Matt Turk, Fiona Tweedie, Martin Ukrop, Anelda van der Walt, Stéfan van der Walt, Allegra Via, Petr Viktorin, Belinda Weaver, Hadley Wickham, Jason Williams, Simon Willison, Karen Word, John Wrenn, and Andromeda Yelton. I am also grateful to Lukas Blakk for the logo, to Shashi Kumar for help with LaTeX, to Markku Rontu for making the diagrams look better, and to everyone who has used this material over the years. Any errors that remain are mine.

Exercises

Each chapter ends with a variety of exercises, each with a suggested format and the time it usually takes to do in person. Many can be used in other formats (in particular, if you are working through this book on your own, you can do many of the exercises meant for groups), and you can always spend more time on an exercise than I suggest.

If you are using this material in a teacher training workshop, you can give participants the following exercises a day or two in advance, so that you get a sense of who they are and how best you can help them. Please read the caveats in Section 9.4 before doing these exercises.

Highs and lows (whole class/5’)

Write brief answers to the following questions and share them with your peers. (If you are taking collaborative notes online as described in Section 9.7, you can write your answers there.)

What is the best class or workshop you have ever taken? What made it so good?

What was the worst one? What made it so bad?

Know yourself (whole class/5’)

Share brief answers to the following questions with your peers. Keep your answers so that you can refer back to them as you work through this book.

What do you most want to teach?

Who do you most want to teach?

Why do you want to teach?

How will you know if you are teaching well?

What do you most want to learn about teaching and learning?

What is one specific thing you believe is true about teaching and learning?

Why learn to program? (individual/20’)

Politicians, business leaders, and educators often say that people should learn to program because the jobs of the future will require it. However, as Benjamin Doxtdator has pointed out, many of these claims are built on shaky foundations. Even if they were true, education shouldn't prepare people for the jobs of the future: it should give them the power to decide what kinds of jobs there are and to make sure those jobs are worth doing. And, as Mark Guzdial points out, there are actually many reasons to learn to program:

To understand our world.

To study and understand processes.

To be able to ask questions about the things that influence our lives.

To use an important new form of literacy.

To have a new way to learn art, music, science, and mathematics.

As a job skill.

To use computers better.

As a medium in which to learn problem solving.

Draw a 3 × 3 grid whose axes are labeled “low,” “medium,” and “high,” and place each reason in a cell according to how important it is to you (the X axis) and to the people you plan to teach (the Y axis).

Which points are closely aligned in importance (i.e., on the diagonal of your grid)?

Which points are misaligned (i.e., in the off-diagonal corners)?

How should this affect what you teach?
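Once every reason has a pair of ratings, sorting them into the diagonal and the off-diagonal corners is mechanical. The sketch below is a hypothetical helper, not part of the exercise as written: it assumes each reason is rated as a pair (importance to you, importance to your learners) using the grid's three labels, and the function name `classify_reasons` and the sample ratings are invented for illustration.

```python
# Hypothetical helper for the 3 x 3 grid exercise (illustration only).
LEVELS = {"low": 0, "medium": 1, "high": 2}


def classify_reasons(ratings: dict[str, tuple[str, str]]) -> dict[str, list[str]]:
    """Group reasons by how far apart their two ratings are.

    ratings maps each reason to (importance to you, importance to learners).
    """
    groups: dict[str, list[str]] = {"aligned": [], "misaligned": [], "in_between": []}
    for reason, (mine, theirs) in ratings.items():
        gap = abs(LEVELS[mine] - LEVELS[theirs])
        if gap == 0:
            groups["aligned"].append(reason)      # on the diagonal
        elif gap == 2:
            groups["misaligned"].append(reason)   # low/high corners off the diagonal
        else:
            groups["in_between"].append(reason)   # one cell away from the diagonal
    return groups


# Invented ratings, for illustration only.
example = {
    "As a job skill": ("low", "high"),
    "To understand our world": ("high", "high"),
    "To use computers better": ("medium", "low"),
}
print(classify_reasons(example))
```

The reasons that land in the misaligned group are the ones worth thinking about when answering the last question.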

Mental models and formative assessment

Natalia Morandeira, Ruth Chirinos and Alejandra Bellini

The first task in teaching is to figure out who your learners are. Our approach is based on the work of researchers such as Patricia Benner, who studied how people progress from novice to expert in nursing [Benn2000]. Benner identified five stages of cognitive development that most people go through in a fairly consistent way. For our purposes, we simplify this progression to three stages:

- Novices

don't know what they don't know, i.e., they don't yet have a usable mental model of the problem domain.

- Competent practitioners

have a mental model that is adequate for everyday purposes. They can do normal tasks with normal effort under normal circumstances, and have some understanding of the limits of their knowledge (i.e., they know what they don't know).

- Experts

have mental models that include exceptions and special cases, which allow them to handle situations that are out of the ordinary. We will discuss expertise in more detail in Chapter 3.

So what is a mental model? As the name suggests, it is a simplified representation of the most important parts of some problem domain which, despite being simplified, is good enough to enable problem solving. One example is the ball-and-stick model of molecules used in school chemistry classes. Atoms aren't actually balls and bonds aren't actually sticks, but the model lets people reason about chemical compounds and their reactions. A more sophisticated model of an atom has a small ball in the center (the nucleus) surrounded by orbiting electrons. It is also wrong, but the extra complexity lets it explain more and solve more problems. (As with software, mental models are never finished: they are simply used.)

Presenting novices with a pile of facts is counter-productive because they don't yet have a model to fit them into. In fact, rushing to present too many facts can reinforce the incorrect mental model they have cobbled together. As [Mull2007a] observed in a study of video instruction for science students:

Students have existing ideas about… phenomena before viewing a video. If the video presents… concepts in a clear, well-illustrated way, students believe they are learning, but they do not engage with the video on a deep enough level to realize that what they have been shown differs from their prior knowledge… There is hope, however. Presenting students' common misconceptions in a video alongside the… concepts to be taught has been shown to increase learning, because it increases the mental effort students make while watching the video.

Your goal when teaching novices should therefore be to help them build a mental model so that they have somewhere to put facts. For example, Software Carpentry's lesson on the Unix shell introduces fifteen commands in three hours. That's one command every twelve minutes, which seems very slow until you realize that the lesson's real purpose isn't to teach those fifteen commands: it is to teach paths, command history, tab completion, wildcards, pipes, command-line arguments, and redirection. Specific commands don't make sense until novices understand these concepts; once they do, they can start reading manuals, search the web for the right keywords, and decide whether the results of their searches are useful or not.

The cognitive differences between novices and competent practitioners underpin the differences between two kinds of teaching materials. A tutorial helps newcomers to a field build a mental model; a manual, on the other hand, helps competent practitioners fill in the gaps in their knowledge. Tutorials frustrate competent practitioners because they move too slowly and say things that are obvious (although they are anything but obvious to novices). Likewise, manuals frustrate novices because they use jargon and don't explain things. This phenomenon is called the expertise reversal effect [Kaly2003], and it is one of the reasons you have to decide early on who your lessons are for.

A handful of exceptions

One of the reasons Unix and C became popular is that [Kern1978,Kern1983,Kern1988] somehow managed to be good tutorials and good manuals at the same time. [Fehi2008] and [Ray2014] are among the few other computing books that have achieved this; even after rereading them several times, I don't know how they did it.

Are your learners learning?

Mark Twain wrote: “It ain't what you don't know that gets you into trouble. It's what you know for sure that just ain't so.” One part of building a mental model is therefore clearing away things that don't belong in it. Broadly speaking, novices' misconceptions fall into three categories:

- Factual errors

like believing that Rio de Janeiro is the capital of Brazil (it is Brasília). These errors are usually easy to correct.

- Broken models

like believing that motion and acceleration must be in the same direction. We can deal with these by having novices reason through examples in which their models give the wrong answer.

- Fundamental beliefs

such as “the world is only a few thousand years old” or “some kinds of people are naturally better at programming than others” [Guzd2015b,Pati2016]. These errors are usually deeply connected to the learner's social identity, so they resist evidence and rationalize contradictions.

People learn faster when teachers identify and clear up their learners' misconceptions during the lesson. This is called formative assessment because it forms (or shapes) the teaching while it is taking place. Learners don't pass or fail a formative assessment. Instead, it gives both the teacher and the learner feedback on how well they are doing and what they should focus on next. For example, a music teacher might ask a learner to play a scale very slowly to check their breathing. The learner finds out whether their breathing is correct, while the teacher gets feedback on whether the explanation they just gave was understood.

In summary

The counterpart of formative assessment is summative assessment, which takes place at the end of the lesson. Summative assessment is like a driving test: it tells the person learning to drive whether they have mastered the topic, and tells the person teaching them whether their lesson was successful. One way to think about the difference between the two is by analogy with cooking: a cook who tastes the food while cooking is doing formative assessment, while a diner who tastes it once it is served is doing summative assessment. Unfortunately, school has trained most people to believe that all assessment is summative, i.e., that if something feels like a test, doing poorly on it will count against them. Making formative assessments feel informal reduces this anxiety; in my experience, using online quizzes, clickers, or anything similar seems to increase anxiety, since most people today believe that everything they do on the internet is being watched and recorded.

To be useful during teaching, a formative assessment must be quick to administer (so that it doesn't break the flow of the lesson) and must have an unambiguous correct answer (so that it can be used with groups). The most widely used kind of formative assessment is probably the multiple choice question. Many teachers have a low opinion of them, but well-designed multiple choice questions can reveal much more than whether someone knows specific facts. For example, suppose you are teaching children how to add multi-digit numbers [Ojos2015] and you give them this multiple choice question:

What is 37 + 15?

a) 52

b) 42

c) 412

d) 43

The correct answer is 52, but the other answers provide valuable information:

If the child chooses 42, they don't understand what “carrying” the one means. (They might write 12 as the answer to 7 + 5, but then overwrite the 1 with the 4 they get from adding 3 + 1.)

If they choose 412, they are treating each column of numbers as a separate problem. This is still wrong, but it is wrong for a different reason.

If they choose 43, they know they have to carry the 1, but they carry it back into the column it came from. Again, this is a different mistake that requires a different clarifying explanation from the teacher.

Each of these wrong answers is a plausible distractor with diagnostic power. A distractor is a wrong answer, or one that is worse than the best answer; “plausible” means that it looks like it could be right, while “diagnostic power” means that each distractor helps the teacher figure out what to explain next to particular learners.
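The three misconceptions described above are precise enough to compute, which is one way to check that each wrong option really corresponds to a distinct mistake. The sketch below is a hypothetical illustration, not taken from [Ojos2015]: it models each misconception as a rule for adding two two-digit numbers, and the function name `addition_distractors` and its labels are invented for this example.

```python
def addition_distractors(a: int, b: int) -> dict[str, int]:
    """Return the correct sum of two two-digit numbers and the answers
    produced by three misconceptions about carrying (illustrative model)."""
    if not (10 <= a <= 99 and 10 <= b <= 99):
        raise ValueError("this sketch only models two-digit operands")
    a_tens, a_ones = divmod(a, 10)
    b_tens, b_ones = divmod(b, 10)
    ones_sum = a_ones + b_ones            # e.g. 7 + 5 = 12
    tens_sum = a_tens + b_tens            # e.g. 3 + 1 = 4
    carry, ones_digit = divmod(ones_sum, 10)
    return {
        "correct": a + b,
        # Carry dropped: the tens sum overwrites the "1" of the ones sum.
        "no_carry": tens_sum * 10 + ones_digit,
        # Each column solved separately, results written side by side.
        "separate_columns": int(f"{tens_sum}{ones_sum}"),
        # Carry put back into the ones column instead of the tens column.
        "carry_to_same_column": tens_sum * 10 + ones_digit + carry,
    }


print(addition_distractors(37, 15))
# {'correct': 52, 'no_carry': 42, 'separate_columns': 412, 'carry_to_same_column': 43}
```

For a sum that needs no carry, such as 21 + 13, all four values are 34, which is a quick check that a question meant to diagnose carrying actually requires a carry.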

The spread of answers to a formative assessment tells you how to proceed. If enough of the class has the right answer, you move on. If most of the class chooses the same wrong answer, you should go back and work on correcting the misconception that distractor points to. If the class's answers are split evenly among several options, they are probably guessing, so you should back up and explain the idea a different way. (Repeating exactly the same explanation probably won't help, which is one of the reasons so many video courses are pedagogically ineffective.)

What if most of the class votes for the right answer but a small group votes for wrong ones? In that case, you have to decide whether to spend time helping the minority understand or whether it is more important to keep the majority engaged. No matter how hard you work or what teaching practices you use, you won't always be able to give your whole class what it needs; it is your responsibility as a teacher to make the call.
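The rules of thumb above can be sketched as a small function over the class's votes. This is a minimal sketch under stated assumptions: the text only says “enough” and “most,” so the 80% and 50% thresholds are invented defaults, the “evenly split” case is approximated as whatever is left over, and the name `next_step` is hypothetical.

```python
from collections import Counter


def next_step(votes: list[str], correct: str,
              move_on_share: float = 0.8, majority_share: float = 0.5) -> str:
    """Suggest what to do after a multiple choice vote.

    The thresholds are illustrative assumptions, not values from the text.
    """
    if not votes:
        raise ValueError("no votes recorded")
    counts = Counter(votes)
    total = len(votes)
    if counts[correct] / total >= move_on_share:
        return "move on"
    # Wrong answers, most popular first.
    wrong = [(answer, n) for answer, n in counts.most_common() if answer != correct]
    if wrong and wrong[0][1] / total > majority_share:
        return f"revisit the misconception behind {wrong[0][0]}"
    if counts[correct] / total > majority_share:
        return "judgment call: help the minority or keep the majority engaged"
    return "re-explain the idea a different way"


print(next_step(["b"] * 6 + ["a"] * 2 + ["c"] * 2, correct="a"))
```

The third branch is the situation described in the previous paragraph: no formula makes that decision for you, so the function only flags it.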

Where do wrong answers come from?

To design plausible distractors, think about the questions your learners asked or the problems they had the last time you taught the subject. If you haven't taught it before, think about your own misconceptions, ask colleagues about their experiences, or look at the history of your field: if people had the same misunderstandings about the subject fifty years ago, chances are that most of your learners still misunderstand it the same way today. You can also ask open-ended questions in class to collect misconceptions about topics you will cover in a later class, or check question-and-answer sites such as Quora or Stack Overflow to see what confuses people learning the subject elsewhere.

Developing formative assessments improves your lessons because it forces you to think about your learners' mental models. In my experience, when I design formative assessments I automatically write the lesson to cover the most likely gaps and errors. Formative assessments therefore pay off even if they aren't used (although teaching is more effective when they are).

Multiple choice questions aren't the only kind of formative assessment: Chapter 12 describes other kinds of exercises that are quick and unambiguous. Whichever kind you choose, you should do one that takes a minute or two every 10–15 minutes to make sure your learners are actually learning. This pace isn't based on an intrinsic attention span: [Wils2007] found little evidence for the oft-repeated claim that learners can only pay attention for 10–15 minutes. Instead, the guideline ensures that if a significant number of people have fallen behind, you only have to repeat a small portion of the lesson. Frequent formative assessments also keep learners engaged, particularly if they involve discussion in small groups (Section 9.2).

Formative assessments can also be used before lessons. If you start a class with a multiple choice question and the whole class answers it correctly, you can skip explaining something your learners already know. This kind of active teaching gives you more time to focus on the things your learners don't know. It also shows learners that you respect their time enough not to waste it, which helps motivation (Chapter 10).

Concept inventories

Given enough data, multiple choice questions can be surprisingly precise. The best-known example is the Force Concept Inventory [Hest1992], which assesses understanding of basic Newtonian mechanics. By interviewing a large number of respondents, correlating their misconceptions with patterns of right and wrong answers, and refining the questions, its creators built a diagnostic tool that can identify specific misconceptions. Researchers can use that tool to measure the effect of changes in teaching methods [Hake1998]. Tew and colleagues developed and validated a language-independent assessment for introductory programming [Tew2011]; [Park2016] replicated it, and [Hamo2017] is developing a concept inventory on recursion. However, tools like these are very expensive to build, and their validity is increasingly threatened by learners' ability to look up answers online.
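One widely used way to turn pre- and post-instruction inventory scores into a number that can be compared across classes is the average normalized gain used in [Hake1998]: the improvement a class actually achieved divided by the largest improvement it could have achieved, g = (post − pre) / (100 − pre), with both scores as class averages in percent. A minimal sketch, where the function name `normalized_gain` and the sample scores are invented for illustration:

```python
def normalized_gain(pre_percent: float, post_percent: float) -> float:
    """Average normalized gain: actual gain divided by maximum possible gain.

    Both arguments are class-average scores on the same inventory, in percent.
    """
    if not 0 <= pre_percent < 100:
        raise ValueError("pre-test average must be in [0, 100)")
    return (post_percent - pre_percent) / (100 - pre_percent)


# Invented class averages: 40% before instruction, 70% after.
print(normalized_gain(40, 70))  # 0.5
```

Dividing by 100 − pre is what makes classes with different starting points comparable: a class that goes from 80% to 90% gets the same gain (0.5) as one that goes from 40% to 70%.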

Desarrollar evaluaciones formativas en una clase solo requiere un poco de preparación y práctica. Puedes darles a tus estudiantes tarjetas coloreadas o numeradas para que respondan una pregunta de opción múltiple simultáneamente (en lugar de que tengan que levantar la mano por turnos), incluir “No tengo idea” como una de las opciones y alentar a que hablen un par de segundos con sus pares más cercanos antes de responder. Todas estas prácticas te ayudarán a garantizar que el flujo de la enseñanza no se interrumpa. La Sección 9.2 describe un método de enseñanza poderoso y basado en evidencia, construido a partir de estas simples ideas.
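Si se registran las respuestas, por ejemplo anotando qué tarjeta levantó cada persona, resumirlas muestra qué parte de la clase respondió bien y qué concepto erróneo predomina. El siguiente bosquejo en Python parte de una pregunta hipotética (“¿Cuánto es 37 + 15?”) cuyos distractores inventamos para esta ilustración, y asocia cada uno a un concepto erróneo distinto. Los nombres `DIAGNOSTICO` y `resumir` también son nuestros.

```python
from collections import Counter

# Pregunta hipotética: "¿Cuánto es 37 + 15?"
# Cada opción se asocia a un concepto erróneo; None marca la respuesta correcta.
DIAGNOSTICO = {
    "52": None,
    "42": "no lleva la decena a la columna siguiente",
    "412": "escribe por separado la suma de cada columna",
    "No tengo idea": "necesita volver a ver el tema",
}


def resumir(respuestas):
    """Devuelve la proporción de respuestas correctas y cuántas personas
    eligieron cada distractor, agrupadas por concepto erróneo."""
    conteo = Counter(respuestas)
    correctas = sum(n for opcion, n in conteo.items() if DIAGNOSTICO[opcion] is None)
    errores = {
        DIAGNOSTICO[opcion]: n
        for opcion, n in conteo.items()
        if DIAGNOSTICO[opcion] is not None
    }
    return correctas / len(respuestas), errores


# Tarjetas levantadas por una clase ficticia de ocho personas.
tarjetas = ["52", "42", "52", "412", "52", "No tengo idea", "42", "52"]
proporcion, errores = resumir(tarjetas)
print(f"{proporcion:.0%} respondió correctamente")  # 50% respondió correctamente
for concepto, n in errores.items():
    print(f"{n} persona(s): {concepto}")
```

Si casi toda la clase elige la respuesta correcta, puedes pasar al tema siguiente. Si muchas tarjetas se concentran en un mismo distractor, conviene abordar primero ese concepto erróneo.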

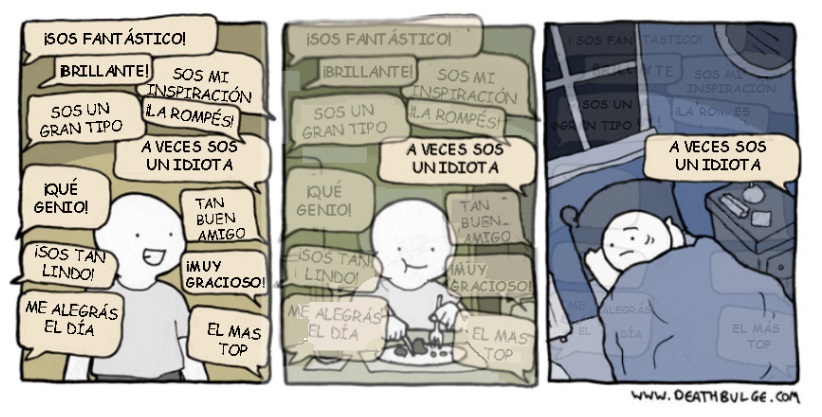

Humor

Los/las docentes a veces incluyen respuestas supuestamente tontas en las preguntas de opción múltiple, como “¡mi nariz!”, particularmente en los cuestionarios destinados a estudiantes jóvenes. Sin embargo, estas respuestas no dicen nada sobre los conceptos erróneos de los/las estudiantes y a la mayoría de la gente no le resultan graciosas. Como regla, solo deberías incluir un chiste en una lección si te sigue pareciendo gracioso la tercera vez que lo relees.

Las evaluaciones formativas de una lección deberían preparar a los/las estudiantes para una evaluación sumativa: nadie debería encontrar nunca una pregunta en un examen para la cual la enseñanza no lo/la ha preparado. Esto no significa que nunca debes incluir nuevos tipos de problemas en un examen, pero, si lo haces, deberías haber ejercitado de antemano a tus estudiantes para abordar problemas nuevos. El Capítulo 6 explora este punto en profundidad.

Máquina nocional

El término pensamiento computacional está muy extendido, en parte porque la gente coincide en que es importante aun cuando con el mismo término se refiere a cosas muy distintas. En vez de discutir qué incluye y qué no incluye el término, es más útil pensar en la máquina nocional que quieres que tus estudiantes entiendan [DuBo1986]. De acuerdo con [Sorv2013], una máquina nocional:

- es una abstracción idealizada del hardware de computadora y de otros aspectos de los entornos de ejecución de los programas;

- permite describir la semántica de los programas; y

- refleja correctamente qué hacen los programas cuando se ejecutan.

Por ejemplo, mi máquina nocional para Python es:

- Los programas en ejecución residen en la memoria, que se divide en la pila de llamadas y el montículo (heap en inglés).

- La memoria para los datos siempre se asigna desde el montículo.

- Cada dato se almacena en una estructura de dos partes: la primera indica de qué tipo de dato se trata y la segunda es el valor real.

- Los booleanos, los números y las cadenas de caracteres nunca se modifican una vez creados.

- Las listas, los conjuntos y otras colecciones almacenan referencias a otros datos en lugar de almacenar esos valores directamente. Pueden modificarse después de su creación; por ejemplo, una lista puede ampliarse o se pueden agregar nuevos valores a un conjunto.

- Cuando se carga código en la memoria, Python lo convierte en una secuencia de instrucciones que se almacenan como cualquier otro dato. Por eso es posible asignar funciones a variables y luego pasarlas como parámetros.

- Cuando se ejecuta el código, Python sigue las instrucciones paso a paso, haciendo lo que cada una le indica, de a una por vez.

- Algunas instrucciones hacen que Python lea datos, haga cálculos y cree nuevos datos. Otras controlan qué instrucciones se ejecutarán a continuación, como los bucles y los condicionales; también hay instrucciones que le indican a Python que llame a una función.

- Cuando se llama a una función, Python coloca un nuevo marco de pila en la pila de llamadas.

- Cada marco de pila almacena los nombres de las variables y las referencias a sus datos. Los parámetros de las funciones son simplemente otro tipo de variable.

- Cuando se usa una variable, Python la busca en el marco superior de la pila. Si no la encuentra allí, la busca en el último marco, el de la memoria global.

- Cuando la función finaliza, Python borra su marco de la pila y vuelve a las instrucciones que estaba ejecutando antes de llamarla. Si no hay un “antes”, el programa ha finalizado.

Uso esta versión caricaturizada de la realidad siempre que enseño Python. Después de 25 horas de instrucción y 100 horas de trabajar por su cuenta, espero que la mayor parte del grupo tenga un modelo mental que incluya todas o la mayoría de estas características.
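Varias de estas características se pueden observar directamente en Python. El siguiente bosquejo asume Python 3.5 o posterior, porque usa el atributo `function` de los resultados de `inspect.stack()`. Muestra el tipo de un dato, la inmutabilidad de las cadenas, las referencias dentro de las listas, las funciones como datos y la pila de llamadas. Todos los nombres de variables y funciones son hipotéticos. Como toda máquina nocional, la descrita arriba simplifica: Python real también busca nombres en las funciones que encierran a la actual y entre los nombres predefinidos (la llamada regla LEGB).

```python
import inspect

# Cada dato tiene un tipo (primera parte) y un valor (segunda parte).
numero = 42
print(type(numero).__name__, numero)  # int 42

# Las cadenas de caracteres no se pueden modificar una vez creadas.
texto = "abc"
try:
    texto[0] = "z"
except TypeError:
    print("una cadena no se puede modificar")

# Las listas guardan referencias: dos variables pueden apuntar a la misma lista.
a = [1, 2]
b = a
b.append(3)
print(a)  # [1, 2, 3]


# Las funciones también son datos: se pueden pasar como parámetros.
def aplicar(funcion, valor):
    return funcion(valor)


print(aplicar(len, "abc"))  # 3

mensaje = "variable global"


def interna():
    # Nombres de las funciones en la pila, desde el marco superior hacia abajo.
    marcos = [info.function for info in inspect.stack()]
    # 'mensaje' no está en el marco de interna: Python la encuentra en la memoria global.
    return marcos, mensaje


def externa():
    # Esta variable vive solo en el marco de externa; interna no puede verla.
    solo_local = "solo existe en el marco de externa"
    return interna()


marcos, valor = externa()
print(marcos[:2])  # ['interna', 'externa']
print(valor)       # variable global
```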

Ejercicios

Tus modelos mentales (pensar-parejas-compartir/15’)

¿Cuál es el modelo mental que usas para entender tu trabajo? Escribe unas pocas oraciones para describirlo y hazle una devolución a tu pareja sobre su modelo mental. Una vez que has hecho esto en pareja, algunas pocas personas de la clase compartirán sus modelos con el grupo completo. ¿Está todo el grupo de acuerdo sobre qué es un modelo mental? ¿Es posible dar una definición precisa?, ¿o el concepto es útil justamente porque es difuso?

Síntomas de ser una persona novata (toda la clase/5’)

Decir que las personas novatas no tienen un modelo mental de un dominio particular no equivale a decir que no tienen ningún modelo mental. Las personas novatas tienden a razonar por analogía y a arriesgar conjeturas: toman prestados retazos de modelos mentales de otros dominios que superficialmente parecen similares.

La gente que hace esto generalmente dice cosas que ni siquiera son falsas. Como clase, discutan qué otros síntomas tiene una persona novata. ¿Qué dice o hace una persona para llevarte a clasificarla como novata en algún dominio?

Modelar modelos mentales de las personas novatas (parejas/20’)

Crea una pregunta de opción múltiple relacionada con un tema que hayas enseñado o pretendas enseñar y explica el poder diagnóstico de cada uno de sus distractores (es decir, qué concepto erróneo pretende identificar cada distractor).

Una vez que hayas finalizado, intercambia las preguntas de opción múltiple con tu pareja. ¿Son sus preguntas ambiguas? ¿Son los conceptos erróneos plausibles? ¿Los distractores realmente evalúan esos conceptos erróneos? ¿Hay otros posibles conceptos erróneos que no sean evaluados?

Pensar en las cosas (toda la clase/15’)

Una buena evaluación formativa requiere que la gente piense profundamente en un problema. Por ejemplo, imagina que colocas un bloque de hielo en un recipiente y luego lo llenas de agua hasta el borde. Cuando el hielo se derrita, ¿el nivel del agua aumentará (de manera que el recipiente se desborde)?, ¿bajará?, ¿o se mantendrá igual (Figura 2.1)?

La respuesta correcta es que el nivel del agua permanece igual: el hielo desplaza su propio peso en agua, por lo que, al derretirse, llena exactamente el “agujero” que había creado. Descubrir por qué ayuda a la gente a construir un modelo de la relación entre peso, volumen y densidad [Epst2002].
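El razonamiento se puede escribir como una pequeña derivación. Suponemos que el hielo flota libremente, que el agua de deshielo tiene la misma densidad ρ_agua que el agua del recipiente y que se pueden ignorar la dilatación térmica y la evaporación. Los símbolos son nuestros.

$$
\begin{aligned}
\text{flotación:}\quad \rho_{\text{agua}}\, V_{\text{desplazado}}\, g &= m_{\text{hielo}}\, g
\;\Rightarrow\; V_{\text{desplazado}} = \frac{m_{\text{hielo}}}{\rho_{\text{agua}}} \\
\text{deshielo:}\quad V_{\text{derretido}} &= \frac{m_{\text{hielo}}}{\rho_{\text{agua}}} = V_{\text{desplazado}}
\end{aligned}
$$

Como la masa se conserva, el agua derretida ocupa exactamente el volumen que el hielo desplazaba, y el nivel no cambia.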

Describe otra evaluación formativa que conozcas o hayas utilizado, alguna que consideres que lleve a los/las estudiantes a pensar profundamente en algo, y por lo tanto ayude a identificar los defectos en sus razonamientos.

Cuando hayas finalizado, explícale tu ejemplo a otra persona de tu grupo y dale una devolución sobre su ejemplo.

Una progresión diferente (individual/15’)

El modelo de desarrollo de habilidades de persona novata-competente-experta es a veces llamado modelo Dreyfus. Otra progresión comúnmente utilizada es el modelo de las cuatro etapas de la competencia:

- Incompetencia inconsciente:

la persona no sabe lo que no sabe.

- Incompetencia consciente:

la persona se da cuenta de que no sabe algo.

- Competencia consciente:

la persona ha aprendido cómo hacer algo, pero solo lo puede hacer mientras mantiene su concentración y quizás aún deba dividir la tarea en varios pasos.

- Competencia inconsciente:

la habilidad se ha transformado en una segunda naturaleza y la persona puede realizarla reflexivamente.

Para cada uno de estos cuatro niveles, identifica un tema en el que te encuentres en ese nivel. En la materia que enseñas, ¿en qué nivel suele estar la mayoría de tus estudiantes? ¿A qué nivel intentas que lleguen? ¿Cómo se relacionan estas cuatro etapas con la clasificación persona novata-competente-experta?

¿Qué tipo de computación? (individual/10’)

[Tedr2008] resume tres tradiciones en computación:

- Matemática:

Los programas son la encarnación de los algoritmos. Son correctos o incorrectos, así como más o menos eficientes.

- Científica:

Los programas son modelos de procesos de información más o menos adecuados que pueden ser estudiados usando el método científico.

- Ingenieril:

Los programas son objetos que se construyen, tales como los diques y los aviones, y son más o menos efectivos y confiables.

¿Cuál de estas tradiciones coincide mejor con tu modelo mental de la computación? Si ninguna de ellas coincide, ¿qué modelo tienes?

Explicar por qué no (parejas/5’)

Una persona de tu curso piensa que hay algún tipo de diferencia entre el texto que tipea carácter por carácter y el texto idéntico que copia y pega. Piensa en una razón por la que tu estudiante puede creer esto o en algo que pueda haber sucedido para darle esa impresión. Luego, simula ser esa persona mientras tu pareja te explica por qué no es así. Intercambia roles con tu pareja y vuelve a probar.

Tu modelo ahora (toda la clase/5’)

Como clase, creen una lista de los elementos clave de su modelo mental de la enseñanza. ¿Cuáles son los seis conceptos más importantes y cómo se relacionan entre sí?

Tus máquinas nocionales (grupos pequeños/20’)

En grupos pequeños, escriban una descripción de la máquina nocional que quieren que sus estudiantes usen para entender cómo se ejecutan sus programas. ¿En qué difiere una máquina nocional para un lenguaje basado en bloques como Scratch de la máquina nocional para Python? ¿Y en qué difiere de una máquina nocional para hojas de cálculo o para un navegador que interpreta HTML⁴ y CSS⁵ al renderizar una página web?

Disfrutar sin aprender (individual/5’)

Muchos estudios han mostrado que las evaluaciones de la enseñanza no se correlacionan con los resultados del aprendizaje [Star2014,Uttl2017], es decir, cuán alto califiquen un curso sus estudiantes no predice cuánto recuerdan. ¿Alguna vez has disfrutado de una clase en la que en realidad no aprendiste nada? Si la respuesta es sí, ¿qué hizo que disfrutaras esa clase?

Revisión

Pericia y memoria

Mónica Alonso, Natalia Morandeira y Silvia Canelón

La memoria es el remanente del pensamiento.

— Daniel Willingham, Por qué a las/los estudiantes no les gusta la escuela (Why Students Don’t Like School)

El capítulo anterior explicaba las diferencias entre personas novatas y practicantes competentes. En este capítulo se aborda la pericia: qué es, cómo se puede adquirir y cómo puede tanto ayudar como perjudicar. Luego presentaremos uno de los límites más importantes del aprendizaje y analizaremos cómo dibujar nuestros modelos mentales puede ayudarnos a convertir el conocimiento en lecciones.

Para empezar, ¿a qué nos referimos cuando decimos que alguien es una persona experta? La respuesta habitual es que puede resolver problemas mucho más rápido que la persona que es “simplemente competente”, o que puede reconocer y entender casos donde las reglas normales no se pueden aplicar. Es más, de alguna manera una persona experta hace que parezca que resolver ciertos problemas no requiere esfuerzo alguno: en muchos casos, parece saber la respuesta correcta de un vistazo [Parn2017].

La pericia es más que conocer más hechos: las/los practicantes competentes pueden memorizar una gran cantidad de trivialidades sin mejorar notablemente su desempeño. En cambio, imagina por un momento que almacenamos el conocimiento como una red o grafo en el cual los hechos son nodos y las relaciones son arcos⁶. La diferencia clave entre personas expertas y practicantes competentes es que los modelos mentales de las personas expertas están mucho más densamente conectados, es decir, es más probable que conozcan una conexión entre dos hechos cualesquiera.
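El siguiente bosquejo hace concreta la metáfora. Representa dos modelos mentales hipotéticos como grafos dirigidos, usando diccionarios de listas de adyacencia (una elección nuestra), y cuenta con una búsqueda en anchura cuántos arcos separan dos hechos. Los nodos A a E son ficticios. En el grafo más densamente conectado, el camino entre los mismos hechos es más corto.

```python
from collections import deque


def pasos_minimos(grafo, inicio, fin):
    """Búsqueda en anchura: cantidad mínima de arcos entre dos hechos,
    o None si no hay ningún camino que los conecte."""
    visitados = {inicio}
    cola = deque([(inicio, 0)])
    while cola:
        nodo, pasos = cola.popleft()
        if nodo == fin:
            return pasos
        for vecino in grafo.get(nodo, []):
            if vecino not in visitados:
                visitados.add(vecino)
                cola.append((vecino, pasos + 1))
    return None


# Modelo hipotético poco conectado: cada hecho solo lleva al siguiente.
competente = {"A": ["B"], "B": ["C"], "C": ["D"], "D": ["E"]}
# Modelo hipotético más denso: hay atajos directos entre hechos.
experta = {"A": ["B", "E"], "B": ["C", "E"], "C": ["D"], "D": ["E"]}

print(pasos_minimos(competente, "A", "E"))  # 4
print(pasos_minimos(experta, "A", "E"))     # 1
```

En este modelo de juguete, el salto directo de A a E corresponde a lo que el texto llama intuición.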

La metáfora del grafo explica por qué ayudar a tus estudiantes a hacer conexiones es tan importante como presentarles los hechos: sin esas conexiones la gente no puede recordar y usar aquello que sabe. Esta metáfora también explica varios aspectos observados del comportamiento experto:

Las personas expertas pueden saltar directamente de un problema a su solución porque realmente existe una conexión directa entre ambos en sus mentes. Mientras que una/un practicante competente tiene que razonar A → B → C → D → E, una persona experta puede ir de A a E en un único paso. A esto lo llamamos intuición: en vez de razonar su camino hacia una solución, la persona experta reconoce una solución de la misma manera que reconocería una cara familiar.

Los grafos densamente conectados son también la base de la representación fluida de las personas expertas, es decir, de su habilidad para alternar entre distintas formas de ver un problema [Petr2016]. Por ejemplo, al tratar de resolver un problema de matemáticas, una persona experta puede pasar de abordarlo geométricamente a representarlo como un conjunto de ecuaciones.

Esta metáfora también explica por qué las personas expertas son mejores para diagnosticar que las/los practicantes competentes: una mayor cantidad de conexiones entre hechos facilita razonar hacia atrás, de los síntomas a las causas. (Por esta razón, durante una entrevista de trabajo para desarrollo de software es preferible pedirles a las/los candidatas/os que depuren un programa en lugar de que programen: da una idea más precisa de su habilidad.)

Finalmente, las personas expertas están muchas veces tan familiarizadas con su tema que no pueden imaginarse cómo es no ver el mundo de esa manera. Esto implica que muchas veces están menos capacitadas para enseñar un tema que aquellas personas con menos experiencia, pero que aún recuerdan cómo lo aprendieron.

El último de estos puntos se llama punto ciego de las personas expertas. Como se definió originalmente en [Nath2003], es la tendencia de las personas expertas a organizar una explicación de acuerdo a los principios fundamentales del tema, en lugar de guiarse por aquello que ya conocen quienes están aprendiendo. Esto se puede superar con entrenamiento, pero es parte de la razón por la cual no hay correlación entre lo bien que investiga alguien en un área y lo bien que esa misma persona enseña la temática [Mars2002].

La letra S

Las personas expertas a menudo caen en sus puntos ciegos al usar la palabra “solo”, como en: “Oh, es fácil, solo enciendes una nueva máquina virtual, luego solo instalas estos cuatro parches a Ubuntu y luego solo reescribes todo tu programa en un lenguaje funcional puro.” Como veremos en el Capítulo 10, hacer esto indica que quien habla piensa que el problema es trivial y, por lo tanto, que la persona que lucha con él debe ser estúpida; así que no lo hagas.

Mapas conceptuales

La herramienta que elegimos para representar el modelo mental de alguien es un mapa conceptual, en el cual los hechos son burbujas y las conexiones son relaciones etiquetadas. Como ejemplos, la Figura 3.1 muestra por qué la Tierra tiene estaciones (de IHMC), y el Anexo 22 presenta mapas conceptuales de una biblioteca desde tres puntos de vista distintos.

Para mostrar cómo se pueden usar los mapas conceptuales para enseñar programación, considera este bucle for en Python:

```python
for letra in "abc":
    print(letra)
```

cuya salida es:

```
a
b
c
```

Las tres “cosas” clave en este bucle se muestran al principio de la Figura 3.2, pero son solo la mitad de la historia. La versión ampliada en la parte derecha de la figura muestra las relaciones entre esas cosas, las cuales son tan importantes para la comprensión como los conceptos en sí mismos.
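Una forma de hacer visibles esas relaciones es reescribir el bucle con los pasos que Python realiza de manera implícita. El siguiente bosquejo es aproximado. Usa las funciones predefinidas `iter` y `next` y la excepción `StopIteration`, que forman parte del protocolo de iteración de Python; el nombre `iterador` es nuestro.

```python
# Versión explícita (aproximada) de:  for letra in "abc": print(letra)
iterador = iter("abc")          # la cadena entrega un iterador sobre sus caracteres
while True:
    try:
        letra = next(iterador)  # la variable del bucle toma el siguiente carácter
    except StopIteration:       # no quedan caracteres: el bucle termina
        break
    print(letra)                # el cuerpo se ejecuta una vez por carácter
```

Cada comentario corresponde, aproximadamente, a una relación que un mapa conceptual del bucle podría incluir. La cadena produce los valores, la variable los recibe de a uno y el cuerpo se repite para cada valor.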

Los mapas conceptuales pueden ser usados de varias maneras:

- Para ayudar a docentes a descubrir qué están tratando de enseñar.

Un mapa conceptual separa el contenido del orden: en nuestra experiencia, las personas rara vez terminan enseñando las cosas en el orden en que las dibujaron por primera vez.

- Para mejorar la comunicación entre quienes diseñan las lecciones.

Si dos docentes tienen ideas muy diferentes de aquello que están tratando de enseñar, es probable que arrastren a sus estudiantes en diferentes direcciones. Dibujar y compartir mapas conceptuales puede ayudar a prevenirlo. Y sí: personas diferentes pueden tener mapas conceptuales diferentes para el mismo tema, pero el mapeo conceptual hace explícitas estas diferencias.

- Para mejorar la comunicación con estudiantes.

Si bien es posible darles a tus estudiantes un mapa ya dibujado al inicio de la lección para que lo anoten, es mejor dibujarlo parte por parte mientras enseñas, para reforzar la relación entre lo que muestra el mapa y lo que dices. Volveremos a esta idea en la Sección 4.1.

- Para evaluación.

Hacer que las/los estudiantes dibujen lo que creen que acaban de aprender le muestra a quien enseña qué se pasó por alto y qué se comunicó mal. Revisar los mapas conceptuales de estudiantes lleva demasiado tiempo como para usarlos como evaluación formativa durante las clases, pero es muy útil en clases semanales una vez que tus estudiantes están familiarizadas/os con la técnica. Esta salvedad es necesaria porque cualquier manera nueva de hacer algo enlentece inicialmente a la gente: si quien está aprendiendo intenta encontrarle el sentido a la programación básica, pedirle que al mismo tiempo descubra cómo esquematizar sus pensamientos es una carga injusta.

Algunas/os docentes dudan de que las personas novatas puedan mapear efectivamente lo que entendieron, dado que la introspección y la explicación de lo entendido son generalmente habilidades más avanzadas que la comprensión en sí misma. Por ejemplo, [Kepp2008] observó el uso del mapeo conceptual en la enseñanza de computación. Uno de los hallazgos fue que “… el mapeo conceptual es problemático para muchas/os estudiantes porque evalúa la comprensión personal en lugar del conocimiento que simplemente se aprendió de memoria.” Como alguien que valora la comprensión por encima del conocimiento de memoria, lo considero un beneficio.

Comienza por cualquier lugar

Cuando se pide por primera vez dibujar un mapa conceptual, muchas personas no saben por dónde empezar. Si esto ocurre, escribe dos palabras asociadas con el tema que estás tratando de mapear, luego dibuja una línea entre ellas y agrega una etiqueta explicando cómo estas dos ideas están relacionadas. Puedes entonces preguntarte qué otras cosas están relacionadas en el mismo sentido, qué partes tienen esas cosas, o qué sucede antes o después con los conceptos que ya están en la hoja a fin de descubrir más nodos y arcos. Después de eso, casi siempre la parte más difícil está terminada.

Los mapas conceptuales son solo una forma de representar nuestro conocimiento de un tema [Eppl2006]; otras incluyen diagramas de Venn, diagramas de flujo y árboles de decisión [Abel2009]. Todos estos esquemas externalizan la cognición, es decir, hacen visibles los modelos mentales para que puedan ser comparados y combinados⁷.

Trabajo crudo y honestidad

Muchas/os diseñadoras/es de interfaces de usuaria/o creen que es mejor mostrar bocetos de sus ideas en lugar de maquetas pulidas, porque es más probable que las personas den una opinión honesta sobre algo que creen que llevó solo unos minutos de elaboración. Si parece que el trabajo requirió horas, la mayoría de las personas suavizará sus críticas. Por eso, al dibujar mapas conceptuales para motivar un intercambio de ideas, deberías usar lápiz y papel borrador (o marcadores y una pizarra) en lugar de sofisticadas herramientas de dibujo por computadora.

Siete más o menos dos

El modelo de grafo del conocimiento es incorrecto pero útil; en cambio, otro modelo simple tiene bases fisiológicas sólidas. Como una aproximación rápida, la memoria humana se puede dividir en dos capas distintas. La primera, llamada memoria a largo plazo o memoria persistente, es donde almacenamos cosas como los nombres de nuestras amistades, nuestra dirección y lo que hizo un payaso en nuestra fiesta de cumpleaños de los ocho años que nos asustó tanto. La capacidad de esta capa de memoria es esencialmente ilimitada, pero su acceso es lento: demasiado lento para ayudarnos a lidiar con leones hambrientos y familiares descontentos.

La evolución entonces nos ha dado un segundo sistema llamado memoria a corto plazo o memoria de trabajo. Es mucho más rápida, pero también más pequeña: [Mill1956] estimó que la memoria de trabajo del adulto promedio solo podía contener 7 ± 2 elementos a la vez. Esta es la razón por la cual los números de teléfono son de 7 u 8 dígitos de longitud: antes los teléfonos tenían un disco en vez de teclado y esa era la cadena de números más larga que la mayoría de los adultos podía recordar con precisión durante el tiempo que tardaba el disco en girar varias veces.

Participación

El tamaño de la memoria de trabajo a veces se usa para explicar por qué los equipos deportivos tienden a formarse con aproximadamente media docena de miembros o se separan en sub-grupos como delanteras/os y línea de tres cuartos en rugby. También se usa para explicar por qué las reuniones solo son productivas hasta un cierto número de participantes: si veinte personas tratan de discutir algo, o bien se arman tres reuniones al mismo tiempo o media docena de personas hablan mientras los demás escuchan. El argumento es que la habilidad de las personas para llevar registro de sus pares está limitada al tamaño de la memoria de trabajo, pero hasta donde sé, esta aseveración jamás fue probada.

7 ± 2 es el número más importante en la enseñanza. Quien enseña no puede colocar información directamente en la memoria a largo plazo de una/un estudiante. En cambio, cualquier cosa que presente se almacena primero en la memoria a corto plazo de cada estudiante y solo se transfiere a la memoria a largo plazo después de haber sido mantenida allí y ensayada (Sección 5.1). Si quien enseña presenta demasiados contenidos demasiado rápido, la información anterior no llega a transferirse antes de ser desplazada por la nueva.

Esta es una de las maneras de usar mapas conceptuales al diseñar una lección: ayudan a asegurarse de que la memoria a corto plazo de tus estudiantes no se sobrecargue. Con el mapa ya dibujado, la/el docente elige un fragmento que quepa en la memoria a corto plazo y que lleve a una evaluación formativa (Figura 3.3); en la próxima lección continúa con otro fragmento del mapa conceptual, y así sucesivamente.

Construyendo juntos mapas conceptuales

La próxima vez que tengas una reunión de equipo, entrega a cada persona una hoja de papel y pídeles que pasen unos minutos dibujando sus propios mapas conceptuales sobre el proyecto en el que están trabajando. A la cuenta de tres, haz que todos revelen sus mapas conceptuales al grupo. La discusión que sigue puede ayudar a las personas a comprender por qué han estado tropezando.

Ten en cuenta que el modelo simple de memoria presentado aquí ha sido reemplazado en gran medida por uno más sofisticado, en el que la memoria a corto plazo se divide en varios almacenamientos (p. ej. para memoria visual versus memoria lingüística), cada uno de los cuales realiza un preprocesamiento involuntario [Mill2016a]. Nuestra presentación es entonces un ejemplo de un modelo mental que ayuda al aprendizaje y al trabajo diario.

Reconocimiento de patrones

Investigaciones recientes sugieren que el tamaño real de la memoria a corto plazo podría ser tan bajo como 4 ± 1 elementos [Dida2016]. Para manejar conjuntos de información más grandes, nuestras mentes crean fragmentos. Por ejemplo, en general recordamos las palabras como elementos simples y no como secuencias de letras. Del mismo modo, el patrón formado por cinco puntos en cartas o dados se recuerda como un todo en lugar de como cinco piezas de información separadas.

Las personas expertas tienen más fragmentos, y de mayor tamaño, que las no expertas, es decir, “ven” patrones más grandes y tienen más patrones con los que contrastar las cosas. Esto les permite razonar a un nivel superior y buscar información de manera más rápida y precisa. Sin embargo, la fragmentación también puede engañarnos si identificamos mal las cosas: quienes recién llegan a veces pueden ver cosas que las personas expertas han mirado sin notar.

Dada la importancia de la fragmentación para pensar, es tentador identificar patrones de diseño y enseñarlos directamente. Estos patrones ayudan a practicantes competentes a pensar y dialogar en varios dominios (incluida la enseñanza [Berg2012]), pero los catálogos de patrones son demasiado áridos y abstractos para que las personas novatas les encuentren sentido por su cuenta. Dicho esto, asignar nombres a un pequeño número de patrones parece ayudar en la enseñanza, principalmente porque da a tus estudiantes un vocabulario más rico para pensar y comunicarse [Kuit2004,Byck2005,Saja2006]. Volveremos a este tema en la Sección 7.5.

Convirtiéndose en una persona experta

Entonces, ¿cómo se convierte alguien en una persona experta? La idea de que hacen falta diez mil horas de práctica para conseguirlo es ampliamente citada, pero probablemente no sea cierta: hacer lo mismo una y otra vez tiene más probabilidades de reforzar los malos hábitos que de mejorar el desempeño. Lo que realmente funciona es hacer cosas similares pero sutilmente diferentes, prestar atención a qué funciona y qué no, y luego cambiar el comportamiento en respuesta a esa retroalimentación, para así mejorar de forma acumulativa. Esto se llama práctica deliberada o práctica reflexiva, y es común que las personas atraviesen tres etapas:

- Actuar según la devolución de otros.

Las/los estudiantes pueden escribir un ensayo sobre qué hicieron en sus vacaciones de verano y recibir devoluciones de una/un docente que les indique cómo mejorarlo.

- Dar devoluciones sobre el trabajo de otras/os.

Las/los estudiantes pueden criticar la evolución de un personaje en la novela de Jorge Amado Doña Flor y sus dos maridos y recibir una devolución de una/un docente sobre esas críticas.

- Darse devoluciones a sí misma/o.

En algún punto, las/los estudiantes empiezan a criticar sus propios trabajos con las habilidades que ya han construido. Dado que autocriticarse es mucho más rápido que esperar los comentarios de otras personas, es en esta etapa cuando la aptitud empieza a crecer rápidamente.

¿Qué cuenta como práctica deliberada?

[Macn2014] descubrió que “…la práctica deliberada explicaba el 26% de la varianza en el rendimiento en juegos, 21% en música, 18% en deportes, 4% en educación y menos del 1% en profesiones.” Sin embargo, [Eric2016] criticó este hallazgo diciendo: “Sumar cada hora de cualquier tipo de práctica durante la carrera de un individuo implica que el impacto de todos los tipos de actividad práctica sobre el rendimiento es igual, una suposición que… es inconsistente con la evidencia.” Para ser efectiva, la práctica deliberada requiere tanto un objetivo de rendimiento claro como una devolución inmediata e informativa, dos cosas que las/los docentes deberían esforzarse en proporcionar.

Ejercicios

Mapear conceptos (parejas/30’)

Dibuja un mapa conceptual sobre algo que puedas enseñar en cinco minutos. Discute con tu colega y critiquen el mapa que cada cual elaboró. ¿Presentan conceptos o detalles superficiales? ¿Cuáles de las relaciones en el mapa de tu colega consideras conceptos y viceversa?

Mapeo de conceptos (nuevamente) (grupos pequeños/20’)

La clase se divide en grupos de 3–4 personas. En cada grupo, cada persona diseña por su cuenta un mapa conceptual que muestre un modelo mental sobre qué sucede en un aula. Cuando todas las personas del grupo hayan terminado, comparen los mapas conceptuales. ¿En qué coinciden y difieren sus modelos mentales?

Mejora de la memoria a corto plazo (individual/5’)

[Cher2007] sugiere que la razón principal por la que las personas dibujan diagramas cuando discuten cosas es ampliar su memoria a corto plazo: señalar una burbuja dibujada hace unos minutos provoca el recuerdo de varios minutos de debate. Cuando intercambiaste mapas conceptuales en el ejercicio anterior, ¿qué tan fácil fue para otras personas entender lo que significaba tu mapa? ¿Qué tan fácil sería para ti si lo dejaras de lado por un día o dos y luego lo miraras de nuevo?

Eso es un poco autorreferencial, ¿no? (toda la clase/30’)

Trabajando independientemente, dibuja un mapa conceptual sobre mapas conceptuales. Compara tu mapa conceptual con aquellos dibujados por las demás personas. ¿Qué incluyeron la mayoría de las personas? ¿Cuáles fueron las diferencias más significativas?

Notar tus puntos ciegos (grupos pequeños/10’)

Elizabeth Wickes listó todo lo que necesitas saber para entender y leer esta línea de Python:

```python
respuestas = ['tuatara', 'tuataras', 'bus', "lick"]
```

- Los corchetes que rodean el contenido significan que estamos trabajando con una lista (a diferencia de los corchetes inmediatamente a la derecha de algo, que es la notación utilizada para extraer datos).

- Los elementos se separan con comas fuera de las comillas (si las comas estuvieran dentro de las comillas, serían parte del texto).

- Cada elemento es una cadena de caracteres, y lo sabemos por las comillas. Aquí podríamos tener números u otro tipo de datos si quisiéramos; necesitamos comillas porque estamos trabajando con cadenas de caracteres.

- Estamos mezclando comillas simples y dobles; a Python no le importa siempre que estén balanceadas alrededor de cada cadena (por cada comilla que abre, una comilla que cierre).

- A cada coma le sigue un espacio, lo cual no es obligatorio para Python, pero lo preferimos para una lectura más clara.
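Varias de estas observaciones se pueden comprobar directamente en el intérprete. El siguiente bosquejo reutiliza la lista `respuestas`; las listas de ejemplo con `'a'` y `'b'` son nuestras.

```python
respuestas = ['tuatara', 'tuataras', 'bus', "lick"]

# Comillas simples y dobles producen la misma cadena.
print('lick' == "lick")          # True

# Una coma dentro de las comillas es parte del texto, no separa elementos.
print(len(['a, b']))             # 1
print(len(['a', 'b']))           # 2

# Los espacios después de las comas son opcionales para Python.
print(['a','b'] == ['a', 'b'])   # True

# Corchetes inmediatamente a la derecha de algo extraen datos.
print(respuestas[0])             # tuatara

# Cada elemento es una cadena de caracteres.
print(all(isinstance(r, str) for r in respuestas))  # True
```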

Una persona experta ni siquiera percibiría estos detalles. Trabajando en grupos de 3–4 personas, selecciona algo igualmente corto de una lección que hayas enseñado o aprendido y divídelo con este nivel de detalle.

Qué enseñar a continuación (individual/5’)

Vuelve al mapa conceptual para la fotosíntesis de la Figura 3.3. ¿Cuántos conceptos y relaciones hay en los fragmentos seleccionados? ¿Qué incluirías en el próximo fragmento de la lección y por qué?

El poder de fragmentación (individual/5’)

Mira la Figura 3.4 durante 10 segundos, luego mira hacia otro lado e intenta escribir tu número de teléfono con estos símbolos⁸. (Usa un espacio para el ‘0’.) Cuando hayas terminado, mira la representación alternativa en el Anexo 23. ¿Cuánto más fáciles de recordar son los símbolos cuando el patrón se hace explícito?

Arquitectura cognitiva

Patricia Loto, María Dermit y Natalia Morandeira

Hemos hablado acerca de modelos mentales como si fueran cosas reales, pero ¿qué es lo que realmente sucede en el cerebro de una persona cuando está aprendiendo? La respuesta corta es que no lo sabemos; la respuesta larga es que sabemos mucho más que antes. Este capítulo profundizará en qué hace el cerebro mientras sucede el aprendizaje y en cómo podemos aprovecharlo para diseñar y brindar lecciones de manera más efectiva.

¿Qué es lo que sucede allí?

La Figura 4.1 es un modelo simplificado de la arquitectura cognitiva humana. El núcleo de este modelo es la separación entre la memoria a corto y a largo plazo vistas en la Sección 3.2. La memoria a largo plazo es como tu sótano: almacena objetos de forma más o menos permanente pero tu conciencia no puede acceder a ella directamente. En cambio, confías en tu memoria a corto plazo, que es como el escritorio de tu mente.

Cuando necesitas algo, tu cerebro lo rescata de la memoria a largo plazo y lo coloca en la memoria a corto plazo. Por el contrario, la nueva información que llega a la memoria a corto plazo debe codificarse para poder ser almacenada en la memoria a largo plazo. Si esa información no está codificada y almacenada, no se recuerda y esto significa que no se ha aprendido.

La información ingresa a la memoria a corto plazo principalmente a través del canal verbal (para el habla) y del canal visual (para las imágenes)⁹. Aunque la mayoría de las personas depende sobre todo de su canal visual, el cerebro recuerda mejor las imágenes y las palabras cuando se complementan entre sí: se codifican juntas, de modo que el recuerdo de una ayuda a activar el recuerdo de la otra.

Las entradas lingüísticas y visuales se procesan en diferentes partes del cerebro humano y, a su vez, los recuerdos lingüísticos y visuales también se almacenan por separado. Esto significa que correlacionar flujos de información lingüística y visual requiere esfuerzo cognitivo: si alguien lee algo mientras lo escucha en voz alta, su cerebro no puede evitar comprobar que recibe la misma información por ambos canales.

Por lo tanto, el aprendizaje mejora cuando la información se presenta simultáneamente por dos canales diferentes, pero empeora cuando esa información es redundante en lugar de complementaria: este fenómeno se conoce como efecto de atención dividida [Maye2003]. Por ejemplo, en general a las personas les resulta más difícil aprender de un video con narración y subtítulos que de un video que tiene solo narración o solo subtítulos, porque en el primer caso parte de su atención se desvía a comprobar que los subtítulos coincidan con la narración. Dos excepciones notables son las personas que aún no hablan bien el idioma y las que tienen alguna discapacidad auditiva u otras necesidades especiales: para ellas, el valor de la información redundante puede superar el esfuerzo de procesamiento adicional.

Fragmento a fragmento

El efecto de atención dividida explica por qué es más efectivo dibujar un diagrama fragmento a fragmento mientras enseñas que presentarlo completo de una sola vez. Si las partes del diagrama aparecen al mismo tiempo que se explican, el/la estudiante asocia en su memoria cada parte con su explicación. Así, más adelante, cuando te enfoques en una parte del diagrama, es más probable que se active el recuerdo de lo que se dijo cuando se dibujó esa parte.

El efecto de atención dividida no significa que los/las estudiantes no deban intentar conciliar múltiples flujos de información entrantes (después de todo, eso es lo que tienen que hacer en el mundo real [Atki2000]). Significa, más bien, que la instrucción no debería pedirles que lo hagan mientras adquieren habilidades por primera vez: el uso simultáneo de múltiples fuentes de información debe tratarse como una tarea de aprendizaje separada.

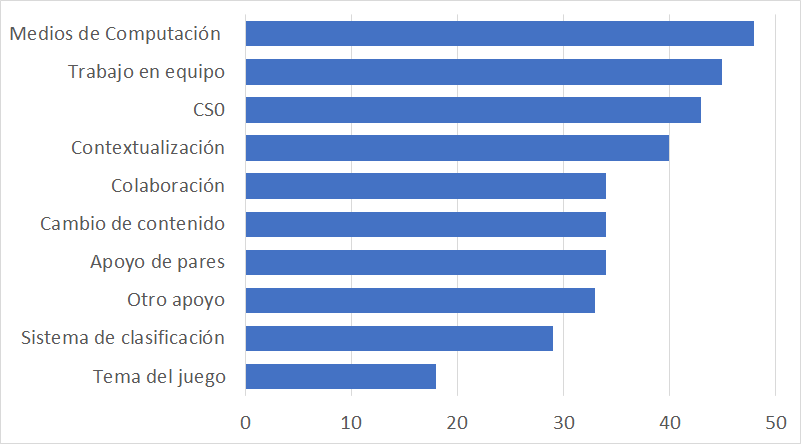

No todos los gráficos son equivalentes

[Sung2012] presenta un elegante estudio que distingue entre gráficos seductores (muy interesantes, pero no directamente relevantes para el objetivo de enseñanza), gráficos decorativos (neutros, pero tampoco directamente relevantes para ese objetivo) y gráficos instructivos (directamente relevantes para el objetivo de enseñanza). Los/las estudiantes que recibieron cualquier tipo de gráfico calificaron mejor el material, pero solo quienes recibieron gráficos instructivos obtuvieron de verdad mejores resultados.

Del mismo modo, [Stam2013,Stam2014] descubrieron que tener más información puede, de hecho, disminuir el rendimiento. Mostraron a niños/as imágenes, imágenes más números o solo números para que realizaran dos tareas. Para algunos/as niños/as, recibir imágenes o imágenes más números fue mejor que recibir solo números; pero para otros/as, recibir imágenes superó a recibir imágenes más números, y esto, a su vez, superó a recibir solo números.

Carga cognitiva

En [Kirs2006], Kirschner, Sweller y Clark escribieron:

Aunque los enfoques educativos no guiados o mínimamente guiados son muy populares e intuitivamente atractivos […] estos enfoques ignoran las estructuras que constituyen la arquitectura cognitiva humana así como la evidencia de estudios empíricos de los últimos cincuenta años. Dichas evidencias indican sistemáticamente que la instrucción guiada mínimamente es menos eficaz y menos eficiente que los enfoques educacionales con un fuerte énfasis en la orientación del proceso de aprendizaje del estudiante. La ventaja de la orientación disminuye solo cuando los/las estudiantes tienen un conocimiento previo suficientemente elevado para proporcionar una orientación “interna”.

Más allá de la jerga, lo que estos autores afirmaban es que pedir a los/las estudiantes que formulen sus propias preguntas, establezcan sus propias metas y encuentren su propio camino a través de un tema es menos efectivo que mostrarles cómo hacer las cosas paso a paso. El enfoque de “elige tu propia aventura” se conoce como aprendizaje basado en la indagación y es intuitivamente atractivo: después de todo, ¿quién se opondría a que sus estudiantes usen su propia iniciativa para resolver problemas del mundo real de forma realista? Sin embargo, pedirles que lo hagan en un dominio nuevo les genera una sobrecarga, ya que les exige dominar al mismo tiempo el contenido fáctico del dominio y las estrategias de resolución de problemas. Más específicamente, la teoría de la carga cognitiva propone que las personas tienen que lidiar con tres cosas cuando están aprendiendo:

- Carga intrínseca: lo que las personas tienen que retener en la mente para aprender el material nuevo.
- Carga pertinente: el esfuerzo mental (deseable) necesario para vincular la información nueva con la que ya se tiene, que es una de las cosas que distinguen el aprendizaje de la memorización.
- Carga extrínseca: cualquier cosa que distraiga del aprendizaje.

La teoría de la carga cognitiva sostiene que las personas tienen que dividir una cantidad fija de memoria de trabajo entre estas tres cosas. Nuestro objetivo como docentes es maximizar la memoria disponible para manejar la carga intrínseca, lo cual significa reducir la carga pertinente en cada paso y eliminar la carga extrínseca.

Problemas de Parsons

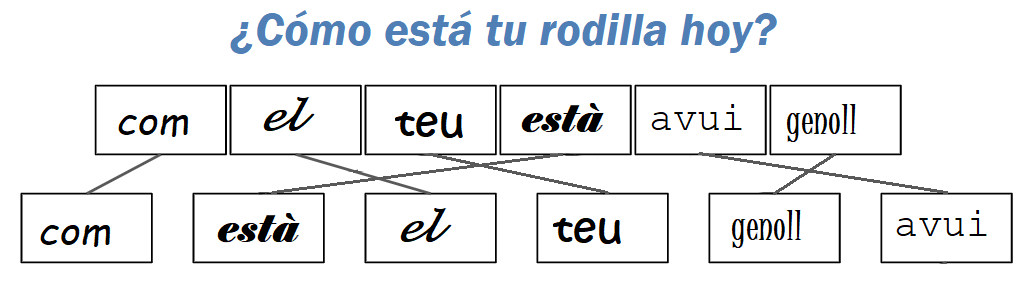

Un tipo de ejercicio que puede explicarse en términos de carga cognitiva se usa a menudo en la enseñanza de idiomas. Supongamos que pides a tus estudiantes que traduzcan la frase “¿Cómo está tu rodilla hoy?” del castellano al catalán. Para resolver el problema necesitan recordar tanto el vocabulario como la gramática, lo que supone una carga cognitiva doble. Si, en lugar de pedirles que traduzcan desde cero, les pides que pongan “com”, “està”, “el”, “teu”, “genoll” y “avui” en el orden correcto, les permites centrarse únicamente en la gramática. Sin embargo, si escribes estas palabras con seis fuentes o colores diferentes, aumentas la carga cognitiva extrínseca, porque invertirán algo de esfuerzo, de forma involuntaria (y posiblemente inconsciente), en averiguar si las diferencias de color o de fuente entre las palabras son significativas (Figura 4.2).

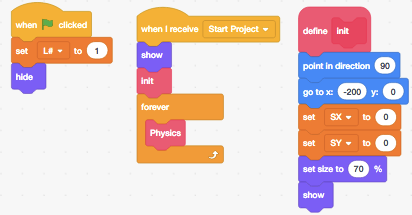

El equivalente de este ejemplo en programación se llama problema de Parsons¹⁰ [Pars2006].

Cuando enseñes a programar, puedes dar a tus estudiantes las líneas de código que necesitan para resolver un problema y pedirles que las pongan en el orden correcto. Esto les permite concentrarse en el flujo de control y en las dependencias de datos, sin distraerse eligiendo nombres de variables o tratando de recordar qué funciones llamar. Múltiples estudios han demostrado que los problemas de Parsons requieren menos tiempo de resolución que escribir o corregir el código equivalente y producen resultados educativos equivalentes [Eric2017].
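A modo de ilustración, el siguiente boceto en Python muestra una forma posible de preparar y corregir un problema de Parsons de manera automática. Es solo un ejemplo bajo supuestos propios: `SOLUCION`, `mezclar_lineas` y `comprobar_respuesta` son nombres hipotéticos, y la corrección ejecuta el código armado y lo prueba con un caso conocido, de modo que acepta cualquier orden que produzca una función correcta. Como usa `exec`, conviene emplearlo solo con código de confianza.

```python
import random

# Líneas de la solución en el orden correcto (ejemplo hipotético).
SOLUCION = [
    "def largo_total(lista_de_palabras):",
    "    total = 0",
    "    for palabra in lista_de_palabras:",
    "        total = total + len(palabra)",
    "    return total",
]


def mezclar_lineas(lineas, semilla=None):
    """Devuelve una copia de las líneas en orden aleatorio: el enunciado del problema."""
    generador = random.Random(semilla)
    mezcladas = list(lineas)
    generador.shuffle(mezcladas)
    return mezcladas


def comprobar_respuesta(lineas_ordenadas):
    """Ejecuta el código armado por la persona y lo prueba con un caso conocido.

    Devuelve False si el orden produce un error (por ejemplo, de sangría)
    o si la función no da el resultado esperado.
    """
    espacio = {}
    try:
        exec("\n".join(lineas_ordenadas), espacio)
        # Caso de prueba supuesto: 4 + 5 + 4 letras.
        return espacio["largo_total"](["rojo", "verde", "azul"]) == 13
    except Exception:
        return False


enunciado = mezclar_lineas(SOLUCION, semilla=1)
print(comprobar_respuesta(SOLUCION))                  # True
print(comprobar_respuesta(list(reversed(SOLUCION))))  # False
```

Comprobar el comportamiento en lugar de comparar con un único orden tiene una ventaja: si hubiera dos órdenes válidos, ambos se aceptarían.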

Ejemplos desvanecidos (o con espacios en blanco)

Otro tipo de ejercicio que se puede explicar en términos de carga cognitiva consiste en dar a tus estudiantes una serie de ejemplos desvanecidos (faded examples en inglés). El primer ejemplo de la serie muestra el uso completo de una estrategia particular de resolución de problemas. El siguiente problema es del mismo tipo, pero tiene algunas lagunas que tus estudiantes deben completar. Cada problema sucesivo ofrece menos andamiaje (scaffolding en inglés), hasta que se pide resolver un problema completo desde cero. Al enseñar álgebra en la escuela secundaria, por ejemplo, podríamos comenzar con esto:

| Lado izquierdo | | Lado derecho |
|---|:-:|---|
| (4x + 8)/2 | = | 5 |
| 4x + 8 | = | 2 * 5 |
| 4x + 8 | = | 10 |
| 4x | = | 10 - 8 |
| 4x | = | 2 |
| x | = | 2 / 4 |
| x | = | 1 / 2 |

y luego pedir a los/las estudiantes que resuelvan esto:

| Lado izquierdo | | Lado derecho |
|---|:-:|---|
| (3x - 1)*3 | = | 12 |
| 3x - 1 | = | _ / _ |
| 3x - 1 | = | 4 |
| 3x | = | _ |
| x | = | _ / 3 |
| x | = | _ |

y esto:

| Lado izquierdo | | Lado derecho |
|---|:-:|---|
| (5x + 1)*3 | = | 4 |
| 5x + 1 | = | _ |
| 5x | = | _ |
| x | = | _ |

y, finalmente, esto:

| Lado izquierdo | | Lado derecho |
|---|:-:|---|
| (2x + 8)/4 | = | 1 |
| x | = | _ |

Un ejercicio similar para enseñar Python podría comenzar mostrando a los/las estudiantes cómo encontrar la longitud total de una lista de palabras:

```python
# largo_total(["rojo", "verde", "azul"]) => 13
def largo_total(lista_de_palabras):
    total = 0
    for palabra in lista_de_palabras:
        total = total + len(palabra)
    return total
```

y luego pidiéndoles que completen los espacios en blanco de este otro código (lo que centra su atención en las estructuras de control):

```python
# largo_palabra(["rojo", "verde", "azul"]) => [4, 5, 4]
def largo_palabra(lista_de_palabras):
    lista_de_longitudes = []
    for ____ in ____:
        lista_de_longitudes.append(____)
    return lista_de_longitudes
```

El siguiente problema podría ser este (que centra su atención en actualizar el resultado final):

```python
# juntar_todo(["rojo", "verde", "azul"]) => "rojoverdeazul"
def juntar_todo(lista_de_palabras):
    palabras_unidas = ____
    for ____ in ____:
        ____
    return palabras_unidas
```

Finalmente, los/las estudiantes tendrán que escribir una función completa por su cuenta:

```python
# generar_acronimo(["rojo", "verde", "azul"]) => "RVA"
def generar_acronimo(lista_de_palabras):
    ____
```

Los ejemplos desvanecidos funcionan porque presentan la estrategia de resolución de problemas fragmento por fragmento. En cada paso, los/las estudiantes tienen un solo problema nuevo que abordar, lo cual es menos intimidante que una pantalla en blanco o una hoja de papel en blanco (Sección 9.11). También animan a los/las estudiantes a pensar en las similitudes y diferencias entre varios enfoques, lo que ayuda a crear vínculos en sus modelos mentales y, de ese modo, facilita la recuperación de la información.
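Una forma de preparar una serie así de manera sistemática es partir de problemas resueltos paso a paso y ocultar cada vez más pasos. El siguiente boceto en Python es solo una posibilidad, bajo supuestos propios: cada problema es una lista de pasos (lado izquierdo, lado derecho) cuyo primer paso es el enunciado, y el problema número i de la serie oculta el lado derecho de sus últimos i pasos. Las funciones `desvanecer` y `serie_desvanecida` son hipotéticas; los pasos provienen de los ejemplos de álgebra anteriores, abreviados.

```python
# Marca que reemplaza el lado derecho de un paso oculto (supuesto propio).
HUECO = "_"


def desvanecer(pasos, ocultos):
    """Oculta el lado derecho de los últimos `ocultos` pasos.

    El primer paso (el enunciado) siempre queda visible.
    """
    ocultos = min(ocultos, len(pasos) - 1)
    visibles = len(pasos) - ocultos
    return [
        (izquierda, derecha if i < visibles else HUECO)
        for i, (izquierda, derecha) in enumerate(pasos)
    ]


def serie_desvanecida(problemas):
    """El problema número i de la serie oculta sus últimos i pasos: cada uno da menos andamiaje."""
    return [desvanecer(pasos, i) for i, pasos in enumerate(problemas)]


# Cada problema es una lista de pasos (lado izquierdo, lado derecho).
problemas = [
    [("(4x + 8)/2", "5"), ("4x + 8", "10"), ("4x", "2"), ("x", "1/2")],
    [("(3x - 1)*3", "12"), ("3x - 1", "4"), ("3x", "5"), ("x", "5/3")],
    [("(5x + 1)*3", "4"), ("5x + 1", "4/3"), ("5x", "1/3"), ("x", "1/15")],
]

for pasos in serie_desvanecida(problemas):
    for izquierda, derecha in pasos:
        print(f"{izquierda} = {derecha}")
    print()
```

Este esquema oculta siempre los pasos finales; la serie de álgebra de arriba deja huecos también en pasos intermedios, algo que podría lograrse eligiendo otros índices para ocultar.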

La clave para construir un buen ejemplo desvanecido es pensar en la estrategia de resolución de problemas que se pretende enseñar. Por ejemplo, todos los problemas de programación anteriores utilizan el patrón de diseño acumulativo, en el que los resultados de procesar los elementos de una colección se van agregando, de una u otra manera, a una sola variable para crear el resultado final.
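El patrón acumulativo puede resumirse en una sola función genérica. El siguiente boceto en Python es una formulación posible y no la del libro: `acumular` es un nombre hipotético que recibe la colección, el valor inicial y una función que combina el resultado parcial con cada elemento. Tres de las funciones de los ejemplos anteriores resultan ser casos particulares de este patrón.

```python
def acumular(coleccion, inicial, combinar):
    """Patrón acumulativo: parte de un valor inicial y lo actualiza con cada elemento."""
    resultado = inicial
    for elemento in coleccion:
        resultado = combinar(resultado, elemento)
    return resultado


def largo_total(lista_de_palabras):
    # Valor inicial 0; se suma la longitud de cada palabra.
    return acumular(lista_de_palabras, 0, lambda total, palabra: total + len(palabra))


def largo_palabra(lista_de_palabras):
    # Valor inicial []; se agrega la longitud de cada palabra al final.
    return acumular(lista_de_palabras, [], lambda longitudes, palabra: longitudes + [len(palabra)])


def juntar_todo(lista_de_palabras):
    # Valor inicial ""; se concatena cada palabra.
    return acumular(lista_de_palabras, "", lambda unidas, palabra: unidas + palabra)


palabras = ["rojo", "verde", "azul"]
print(largo_total(palabras))    # 13
print(largo_palabra(palabras))  # [4, 5, 4]
print(juntar_todo(palabras))    # rojoverdeazul
```

En la biblioteca estándar de Python, `functools.reduce` cumple el mismo papel que `acumular`; escribir el bucle de forma explícita, sin embargo, deja a la vista los tres pasos del patrón.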

Aprendizaje cognitivo

Un modelo alternativo de aprendizaje e instrucción que también usa andamiaje y desvanecimiento es el aprendizaje cognitivo, que enfatiza la forma en que un/a docente transmite habilidades y conocimientos a un/a estudiante. El/la docente proporciona modelos de desempeño y resultados, luego entrena a las personas novatas explicando qué están haciendo y por qué [Coll1991,Casp2007]. El/la estudiante reflexiona sobre su propia resolución de problemas, por ejemplo, pensando en voz alta o criticando su propio trabajo, y finalmente explora problemas de su propia elección.

Este modelo nos dice que los/las docentes deben presentar varios ejemplos al explicar una nueva idea, para que los/las estudiantes puedan ver qué generalizar, y que deben variar la forma del problema para dejar claro qué características son superficiales y cuáles no¹¹. Los problemas deben presentarse en contextos del mundo real, y debemos fomentar la autoexplicación para ayudar a los/las estudiantes a organizar lo que se les acaba de enseñar y darle sentido (Sección 5.1).

Subobjetivos etiquetados

Etiquetar subobjetivos significa dar nombre a los pasos de una descripción paso a paso de un proceso de resolución de problemas. [Marg2016,Morr2016] descubrieron que, al etiquetar los subobjetivos, los/las estudiantes resolvían mejor los problemas de Parsons, y el mismo beneficio se observa en otros dominios [Marg2012]. Volviendo al ejemplo de Python usado anteriormente, los subobjetivos para encontrar la longitud total de una lista de palabras o construir un acrónimo son:

- Crea un valor vacío del tipo que se quiere obtener.
- A partir de la variable del bucle, obtén el valor que se agregará al resultado.
- Actualiza el resultado con ese valor.
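En el código, los subobjetivos pueden aparecer como comentarios que nombran cada paso. El siguiente boceto en Python es una solución posible del ejercicio del acrónimo; escribir las etiquetas como comentarios es una elección propia, y se supone que ninguna palabra de la lista está vacía.

```python
def generar_acronimo(lista_de_palabras):
    # Subobjetivo 1: crear un valor vacío del tipo a obtener (una cadena).
    acronimo = ""
    for palabra in lista_de_palabras:
        # Subobjetivo 2: a partir de la variable del bucle, obtener el valor a agregar.
        # (Supuesto: la palabra no está vacía, así que palabra[0] existe.)
        inicial = palabra[0].upper()
        # Subobjetivo 3: actualizar el resultado con ese valor.
        acronimo = acronimo + inicial
    return acronimo


print(generar_acronimo(["rojo", "verde", "azul"]))  # RVA
```

Las mismas tres etiquetas servirían, con otro contenido en cada paso, para `largo_total` o `juntar_todo`.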

Etiquetar subobjetivos funciona porque agrupar los pasos relacionados en fragmentos con nombre (Sección 3.2) ayuda a tus estudiantes a distinguir lo que es genérico de lo que es específico del problema en cuestión. También les ayuda a construir un modelo mental de ese tipo de problema, de modo que luego pueden resolver otros problemas de ese tipo, y les da una oportunidad natural para la autoexplicación (Sección 5.1).

Manuales mínimos