Teaching Tech Together

How to create and deliver lessons that work

and build a teaching community around them

Taylor & Francis, 2019, 978-0-367-35328-5

Dedication

For my mother, Doris Wilson,

who taught hundreds of children to read and to believe in themselves.

And for my brother Jeff, who did not live to see it finished.

"Remember, you still have a lot of good times in front of you."

All royalties from the sale of this book are being donated to

the Carpentries,

a volunteer organization that teaches

foundational coding and data science skills

to researchers worldwide.

The Rules

Be kind: all else is details.

Remember that you are not your learners…

…that most people would rather fail than change…

…and that ninety percent of magic consists of knowing one extra thing.

Never teach alone.

Never hesitate to sacrifice truth for clarity.

Make every mistake a lesson.

Remember that no lesson survives first contact with learners…

…that every lesson is too short for the teacher and too long for the learner…

…and that nobody will be more excited about the lesson than you are.

Introduction

Grassroots groups have sprung up around the world to teach programming, web design, robotics, and other skills to free-range learners. These groups exist so that people don’t have to learn these things on their own, but ironically, their founders and teachers are often teaching themselves how to teach.

There’s a better way. Just as knowing a few basic facts about germs and nutrition can help you stay healthy, knowing a few things about cognitive psychology, instructional design, inclusivity, and community organization can help you be a more effective teacher. This book presents key ideas you can use right now, explains why we believe they are true, and points you at other resources that will help you go further.

Re-Use

Parts of this book were originally created for the Software Carpentry instructor training program, and all of it can be freely distributed and re-used under the Creative Commons Attribution-NonCommercial 4.0 license (Appendix 16). You can use the online version at http://teachtogether.tech/ in any class (free or paid), and can quote short excerpts under fair use provisions, but cannot republish large parts in commercial works without prior permission.

Contributions, corrections, and suggestions are all welcome, and all contributors will be acknowledged each time a new version is published. Please see Appendix 18 for details and Appendix 17 for our code of conduct.

Who You Are

Section 6.1 explains how to figure out who your learners are. The four that this book is for are all end-user teachers: teaching isn’t their primary occupation, they have little or no background in pedagogy, and they may work outside institutional classrooms.

- Emily

trained as a librarian and now works as a web designer and project manager in a small consulting company. In her spare time she helps run web design classes for women entering tech as a second career. She is now recruiting colleagues to run more classes in her area, and wants to know how to make lessons others can use and grow a volunteer teaching organization.

- Moshe

is a professional programmer with two teenage children whose school doesn’t offer programming classes. He has volunteered to run a monthly after-school programming club, and while he frequently gives presentations to colleagues, he has no classroom experience. He wants to learn how to build effective lessons in reasonable time, and would like to know more about the pros and cons of self-paced online classes.

- Samira

is an undergraduate in robotics who is thinking about becoming a teacher after she graduates. She wants to help at weekend robotics workshops for her peers, but has never taught a class before and feels a lot of impostor syndrome. She wants to learn more about education in general in order to decide if it’s for her, and is also looking for specific tips to help her deliver lessons more effectively.

- Gene

is a professor of computer science. They have been teaching undergraduate courses on operating systems for six years, and increasingly believe that there has to be a better way. The only training available through their university’s teaching and learning center is about posting assignments and submitting grades in the online learning management system, so they want to find out what else they should be asking for.

These people have a variety of technical backgrounds and some previous teaching experience, but no formal training in teaching, lesson design, or community organization. Most work with free-range learners and are focused on teenagers and adults rather than children; all have limited time and resources. We expect our quartet to use this material as follows:

- Emily

will take part in a weekly online reading group with her volunteers.

- Moshe

will cover part of this book in a one-day weekend workshop and study the rest on his own.

- Samira

will use this book in a one-semester undergraduate course with assignments, a project, and a final exam.

- Gene

will read the book on their own in their office or while commuting, wishing all the while that universities did more to support high-quality teaching.

What to Read Instead

If you are in a hurry or want a taste of what this book will cover, [Brow2018] presents ten evidence-based tips for teaching computing. You may also enjoy:

The Carpentries instructor training, from which this book is derived.

[Lang2016] and [Hust2012], which are short and approachable, and which connect things you can do right now to the research that backs them.

[Berg2012,Lemo2014,Majo2015,Broo2016,Rice2018,Wein2018b] are all full of practical suggestions for things you can do in your classroom, but may make more sense once you have a framework for understanding why their ideas work.

[DeBr2015], which explains what’s true about education by explaining what isn’t, and [Dida2016], which grounds learning theory in cognitive psychology.

[Pape1993], which remains an inspiring vision of how computers could change education. Amy Ko’s excellent description does a better job of summarizing Papert’s ideas than I possibly could, and [Craw2010] is a thought-provoking companion to both.

[Gree2014,McMi2017,Watt2014] explain why so many attempts at educational reform have failed over the past forty years, how for-profit colleges are exploiting and exacerbating the growing inequality in our society, and how technology has repeatedly failed to revolutionize education.

[Brow2007] and [Mann2015], because you can’t teach well without changing the system in which we teach, and you can’t do that on your own.

Those who want more academic material may also find [Guzd2015a,Hazz2014,Sent2018,Finc2019,Hpl2018] rewarding, while Mark Guzdial’s blog has consistently been informative and thought-provoking.

Acknowledgments

This book would not exist without the contributions of Laura Acion, Jorge Aranda, Mara Averick, Erin Becker, Yanina Bellini Saibene, Azalee Bostroem, Hugo Bowne-Anderson, Neil Brown, Gerard Capes, Francis Castro, Daniel Chen, Dav Clark, Warren Code, Ben Cotton, Richie Cotton, Karen Cranston, Katie Cunningham, Natasha Danas, Matt Davis, Neal Davis, Mark Degani, Tim Dennis, Paul Denny, Michael Deutsch, Brian Dillingham, Grae Drake, Kathi Fisler, Denae Ford, Auriel Fournier, Bob Freeman, Nathan Garrett, Mark Guzdial, Rayna Harris, Ahmed Hasan, Ian Hawke, Felienne Hermans, Kate Hertweck, Toby Hodges, Roel Hogervorst, Mike Hoye, Dan Katz, Christina Koch, Shriram Krishnamurthi, Katrin Leinweber, Colleen Lewis, Dave Loyall, Paweł Marczewski, Lenny Markus, Sue McClatchy, Jessica McKellar, Ian Milligan, Julie Moronuki, Lex Nederbragt, Aleksandra Nenadic, Jeramia Ory, Joel Ostblom, Elizabeth Patitsas, Aleksandra Pawlik, Sorawee Porncharoenwase, Emily Porta, Alex Pounds, Thomas Price, Danielle Quinn, Ian Ragsdale, Erin Robinson, Rosario Robinson, Ariel Rokem, Pat Schloss, Malvika Sharan, Florian Shkurti, Dan Sholler, Juha Sorva, Igor Steinmacher, Tracy Teal, Tiffany Timbers, Richard Tomsett, Preston Tunnell Wilson, Matt Turk, Fiona Tweedie, Martin Ukrop, Anelda van der Walt, Stéfan van der Walt, Allegra Via, Petr Viktorin, Belinda Weaver, Hadley Wickham, Jason Williams, Simon Willison, Karen Word, John Wrenn, and Andromeda Yelton. I am also grateful to Lukas Blakk for the logo, to Shashi Kumar for LaTeX help, to Markku Rontu for making the diagrams look better, and to everyone who has used this material over the years. Any mistakes that remain are mine.

Exercises

Each chapter ends with a variety of exercises that include a suggested format and how long they usually take to do in person. Most can be used in other formats—in particular, if you are going through this book on your own, you can still do many of the exercises that are intended for groups—and you can always spend more time on them than what’s suggested.

If you are using this material in a teacher training workshop, you can give the exercises below to participants a day or two in advance to get an idea of who they are and how best you can help them. Please read the caveats in Section 9.4 before doing this.

Highs and Lows (whole class/5)

Write brief answers to the following questions and share with your peers. (If you are taking notes together online as described in Section 9.7, put your answers there.)

What is the best class or workshop you ever took? What made it so good?

What was the worst one? What made it so bad?

Know Thyself (whole class/10)

Share brief answers to the following questions with your peers. Record your answers so that you can refer back to them as you go through the rest of this book.

What do you most want to teach?

Who do you most want to teach?

Why do you want to teach?

How will you know if you’re teaching well?

What do you most want to learn about teaching and learning?

What is one specific thing you believe is true about teaching and learning?

Why Learn to Program? (individual/20)

Politicians, business leaders, and educators often say that people should learn to program because the jobs of the future will require it. However, as Benjamin Doxtdator pointed out, many of those claims are built on shaky ground. Even if they were true, education shouldn’t prepare people for the jobs of the future: it should give them the power to decide what kinds of jobs there are and to ensure that those jobs are worth doing. And as Mark Guzdial points out, there are actually many reasons to learn how to program:

To understand our world.

To study and understand processes.

To be able to ask questions about the influences on their lives.

To use an important new form of literacy.

To have a new way to learn art, music, science, and mathematics.

As a job skill.

To use computers better.

As a medium in which to learn problem-solving.

Draw a 3 × 3 grid whose axes are labeled “low,” “medium,” and “high” and place each reason in one sector according to how important it is to you (the X axis) and to the people you plan to teach (the Y axis).

Which points are closely aligned in importance (i.e. on the diagonal in your grid)?

Which points are misaligned (i.e. in the off-diagonal corners)?

How should this affect what you teach?

Mental Models and Formative Assessment

The first task in teaching is to figure out who your learners are. Our approach is based on the work of researchers like Patricia Benner, who studied how nurses progress from novice to expert [Benn2000]. Benner identified five stages of cognitive development that most people go through in a fairly consistent way. For our purposes, we will simplify this progression to three stages:

- Novices

don’t know what they don’t know, i.e. they don’t yet have a usable mental model of the problem domain.

- Competent practitioners

have a mental model that’s adequate for everyday purposes. They can do normal tasks with normal effort under normal circumstances, and have some understanding of the limits to their knowledge (i.e. they know what they don’t know).

- Experts

have mental models that include exceptions and special cases, which allows them to handle situations that are out of the ordinary. We will discuss expertise in more detail in Chapter 3.

So what is a mental model? As the name suggests, it is a simplified representation of the most important parts of some problem domain that is good enough to enable problem solving. One example is the ball-and-spring models of molecules used in high school chemistry. Atoms aren’t actually balls, and their bonds aren’t actually springs, but the model enables people to reason about chemical compounds and their reactions. A more sophisticated model of an atom has a small central ball (the nucleus) surrounded by orbiting electrons. It’s also wrong, but the extra complexity enables people to explain more and to solve more problems. (Like software, mental models are never finished: they’re just used.)

Presenting a novice with a pile of facts is counter-productive because they don’t yet have a model to fit those facts into. In fact, presenting too many facts too soon can actually reinforce the incorrect mental model they’ve cobbled together. As [Mull2007a] observed in a study of video instruction for science students:

Students have existing ideas about…phenomena before viewing a video. If the video presents…concepts in a clear, well illustrated way, students believe they are learning but they do not engage with the media on a deep enough level to realize that what is presented differs from their prior knowledge… There is hope, however. Presenting students’ common misconceptions in a video alongside the…concepts has been shown to increase learning by increasing the amount of mental effort students expend while watching it.

Your goal when teaching novices should therefore be to help them construct a mental model so that they have somewhere to put facts. For example, Software Carpentry’s lesson on the Unix shell introduces fifteen commands in three hours. That’s one command every twelve minutes, which seems glacially slow until you realize that the lesson’s real purpose isn’t to teach those fifteen commands: it’s to teach paths, history, tab completion, wildcards, pipes, command-line arguments, and redirection. Specific commands don’t make sense until novices understand those concepts; once they do, they can start to read manual pages, search for the right keywords on the web, and tell whether the results of their searches are useful or not.

The cognitive differences between novices and competent practitioners underpin the differences between two kinds of teaching materials. A tutorial helps newcomers to a field build a mental model; a manual, on the other hand, helps competent practitioners fill in the gaps in their knowledge. Tutorials frustrate competent practitioners because they move too slowly and say things that are obvious (though they are anything but obvious to novices). Equally, manuals frustrate novices because they use jargon and don’t explain things. This phenomenon is called the expertise reversal effect [Kaly2003], and is one of the reasons you have to decide early on who your lessons are for.

A Handful of Exceptions

One of the reasons Unix and C became popular is that [Kern1978,Kern1983,Kern1988] somehow managed to be good tutorials and good manuals at the same time. [Fehi2008] and [Ray2014] are among the very few other books in computing that achieve this; even after re-reading them several times, I don’t know how they pull it off.

Are People Learning?

Mark Twain once wrote, “It ain’t what you don’t know that gets you into trouble. It’s what you know for sure that just ain’t so.” One of the exercises in building a mental model is therefore to clear away things that don’t belong. Broadly speaking, novices’ misconceptions fall into three categories:

- Factual errors

like believing that Vancouver is the capital of British Columbia (it’s Victoria). These are usually simple to correct.

- Broken models

like believing that motion and acceleration must be in the same direction. We can address these by having novices reason through examples where their models give the wrong answer.

- Fundamental beliefs

such as “the world is only a few thousand years old” or “some kinds of people are just naturally better at programming than others” [Guzd2015b,Pati2016]. These errors are often deeply connected to the learner’s social identity, so they resist evidence and rationalize contradictions.

People learn fastest when teachers identify and clear up learners’ misconceptions as the lesson is being delivered. This is called formative assessment because it forms (or shapes) the teaching while it is taking place. Learners don’t pass or fail formative assessment; instead, it gives both the teacher and the learner feedback on how well they are doing and what they should focus on next. For example, a music teacher might ask a learner to play a scale very slowly to check their breathing. The learner finds out if they are breathing correctly, while the teacher gets feedback on whether the explanation they just gave made sense.

Summing Up

The counterpoint to formative assessment is summative assessment, which takes place at the end of the lesson. Summative assessment is like a driver’s test: it tells the learner whether they have mastered the topic and the teacher whether their lesson was successful. One way of thinking about the difference is that a chef tasting food as she cooks it is formative assessment, but the guests tasting it once it’s served is summative.

Unfortunately, school has trained most people to believe that all assessment is summative, i.e. that if something feels like a test, doing poorly will count against you. Making formative assessments feel informal helps reduce this anxiety; in my experience, using online quizzes, clickers, or anything else seems to increase it, since most people today believe that anything they do on the web is being watched and recorded.

In order to be useful during teaching, a formative assessment has to be quick to administer (so that it doesn’t break the flow of the lesson) and have an unambiguous correct answer (so that it can be used with groups). The most widely used kind of formative assessment is probably the multiple choice question (MCQ). A lot of teachers have a low opinion of them, but when they are designed well, they can reveal much more than just whether someone knows specific facts. For example, suppose you are teaching children how to do multi-digit addition [Ojos2015] and you give them this MCQ:

What is 37 + 15?

a) 52

b) 42

c) 412

d) 43

The correct answer is 52, but the other answers provide valuable insights:

If the child chooses 42, she has no understanding of what “carrying” means. (She might well write 12 as the answer to 7+5, then overwrite the 1 with the 4 she gets from 3+1.)

If she chooses 412, she is treating each column of numbers as a separate problem. This is still wrong, but it’s wrong for a different reason.

If she chooses 43 then she knows she has to carry the 1 but is carrying it back into the column it came from. Again, this is a different mistake, and requires a different clarifying explanation from the teacher.

Each of these incorrect answers is a plausible distractor with diagnostic power. A distractor is a wrong or less-than-best answer; “plausible” means that it looks like it could be right, while “diagnostic power” means that each of the distractors helps the teacher figure out what to explain next to that particular learner.
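The diagnostic power of these distractors can be made concrete by writing each misconception as a procedure. The sketch below is only an illustration: the function names and the `diagnose` helper are hypothetical and are not taken from [Ojos2015]. Each function mimics one of the faulty procedures described above, so that a wrong answer can be traced back to the mistake that produces it.

```python
def add_correctly(a, b):
    """Ordinary addition (37 + 15 gives 52)."""
    return a + b


def add_dropping_carry(a, b):
    """Add column by column and discard any carry (37 + 15 gives 42)."""
    result, place = 0, 1
    while a > 0 or b > 0:
        column = a % 10 + b % 10
        result += (column % 10) * place
        a, b, place = a // 10, b // 10, place * 10
    return result


def add_columns_separately(a, b):
    """Write each column's full sum side by side (37 + 15 gives 412)."""
    pieces = []
    while a > 0 or b > 0:
        pieces.append(str(a % 10 + b % 10))
        a, b = a // 10, b // 10
    if not pieces:
        return 0
    return int("".join(reversed(pieces)))


def add_carry_into_same_column(a, b):
    """Add each carry back into the column it came from (37 + 15 gives 43)."""
    result, place = 0, 1
    while a > 0 or b > 0:
        column = a % 10 + b % 10
        result += (column % 10 + column // 10) * place
        a, b, place = a // 10, b // 10, place * 10
    return result


# Hypothetical labels for the procedures, checked in this order.
PROCEDURES = {
    "correct": add_correctly,
    "drops the carry": add_dropping_carry,
    "treats columns separately": add_columns_separately,
    "carries into the same column": add_carry_into_same_column,
}


def diagnose(a, b, answer):
    """Return the label of the first procedure that produces this answer."""
    for label, procedure in PROCEDURES.items():
        if procedure(a, b) == answer:
            return label
    return "unrecognized"
```

For 37 + 15 the four procedures give 52, 42, 412, and 43, which are the four options in the question. A question such as 12 + 13, which involves no carrying, gives 25 under all four procedures and so would have no diagnostic power.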

The spread of responses to a formative assessment guides what you do next. If enough of the class has the right answer, you move on. If the majority of the class chooses the same wrong answer, you should go back and work on correcting the misconception that distractor points to. If their answers are evenly split between several options they are probably just guessing, so you should back up and re-explain the idea in a different way. (Repeating exactly the same explanation will probably not be useful, which is one of the things that make so many video courses pedagogically ineffective.)

What if most of the class votes for the right answer but a few vote for wrong ones? In that case, you have to decide whether you should spend time getting the minority caught up or whether it’s more important to keep the majority engaged. No matter how hard you work or what teaching practices you use, you won’t always be able to give everyone what they need; it’s your responsibility as a teacher to make the call.
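The decision rule in the last two paragraphs can be sketched as a small function. The thresholds here (80% counts as “enough” and more than half counts as “most”) are assumptions chosen for illustration: the text does not prescribe numbers, and the name `next_step` is hypothetical.

```python
from collections import Counter


def next_step(votes, correct, enough=0.8):
    """Suggest what to do after an MCQ, given one vote per learner.

    `votes` is a list of chosen options such as ["a", "b", "a"] and
    `correct` is the right option. `enough` is an assumed threshold.
    """
    if not votes:
        raise ValueError("no votes were cast")
    counts = Counter(votes)
    total = len(votes)
    share_correct = counts[correct] / total

    if share_correct >= enough:
        return "move on"

    # Find the most popular wrong answer, if there is one.
    wrong = {option: n for option, n in counts.items() if option != correct}
    top_wrong = max(wrong, key=wrong.get, default=None)
    if top_wrong is not None and wrong[top_wrong] / total > 0.5:
        return f"correct the misconception behind option {top_wrong}"

    if share_correct > 0.5:
        return "teacher's call: catch up the minority or keep going"

    return "re-explain the idea in a different way"
```

A class that votes `list("bbbbbbbaac")` when the right answer is `a` would be steered back to the misconception behind option `b`, while an even split such as `list("aabbccdd")` suggests guessing and a fresh explanation.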

Where Do Wrong Answers Come From?

In order to come up with plausible distractors, think about the questions your learners asked or problems they had the last time you taught this subject. If you haven’t taught it before, think about your own misconceptions, ask colleagues about their experiences, or look at the history of your field: if everyone misunderstood your subject in some way fifty years ago, the odds are that a lot of your learners will still misunderstand it that way today. You can also ask open-ended questions in class to collect misconceptions about material to be covered in a later class, or check question and answer sites like Quora or Stack Overflow to see what people learning the subject elsewhere are confused by.

Developing formative assessments makes your lessons better because it forces you to think about your learners’ mental models. In my experience, once I do this I automatically write the lesson to cover the most likely gaps and errors. Formative assessments therefore pay off even if they aren’t used (though teaching is more effective if they are).

MCQs aren’t the only kind of formative assessment: Chapter 12 describes other kinds of exercises that are quick and unambiguous. Whatever you pick, you should do something that takes a minute or two every 10–15 minutes to make sure that your learners are actually learning. This rhythm isn’t based on an intrinsic attentional limit: [Wils2007] found little support for the often-repeated claim that learners can only pay attention for 10–15 minutes. Instead, the guideline ensures that if a significant number of people have fallen behind, you only have to repeat a short portion of the lesson. Frequent formative assessments also keep learners engaged, particularly if they involve small-group discussion (Section 9.2).

Formative assessments can also be used before lessons. If you start a class with an MCQ and everyone answers it correctly, you can avoid explaining something that your learners already know. This kind of active teaching gives you more time to focus on things they don’t know. It also shows learners that you respect their time enough not to waste it, which helps with motivation (Chapter 10).

Concept Inventories

Given enough data, MCQs can be made surprisingly precise. The best-known example is the Force Concept Inventory [Hest1992], which assesses understanding of basic Newtonian mechanics. By interviewing a large number of respondents, correlating their misconceptions with patterns of right and wrong answers, and then improving the questions, its creators constructed a diagnostic tool that can pinpoint specific misconceptions. Researchers can then use that tool to measure the effect of changes in teaching methods [Hake1998].

Tew and others developed and validated a language-independent assessment for introductory programming [Tew2011]; [Park2016] replicated it, and [Hamo2017] is developing a concept inventory for recursion. However, it’s very costly to build tools like this, and learners’ ability to search for answers online is an ever-increasing threat to their validity.

Working formative assessments into class only requires a little bit of preparation and practice. Giving learners colored or numbered cards so that they can all answer an MCQ at once (rather than holding up their hands in turn), having one of the options be, “I have no idea,” and encouraging them to talk to their neighbors for a few seconds before answering will all help ensure that your teaching flow isn’t disrupted. Section 9.2 describes a powerful, evidence-based teaching method that builds on these simple ideas.

Humor

Teachers sometimes put supposedly-silly answers like “my nose!” on MCQs, particularly ones intended for younger learners. However, these don’t provide any insight into learners’ misconceptions, and most people don’t actually find them funny. As a rule, you should only include a joke in a lesson if you find it funny the third time you re-read it.

A lesson’s formative assessments should prepare learners for its summative assessment: no one should ever encounter a question on an exam that the teaching did not prepare them for. This doesn’t mean you should never put new kinds of problems on an exam, but if you do, you should have given learners practice tackling novel problems beforehand. Chapter 6 explores this in depth.

Notional Machines

The term computational thinking is bandied about a lot, in part because people can agree it’s important while meaning very different things by it. Rather than arguing over what it does and doesn’t include, it’s more useful to think about the notional machine that you want learners to understand [DuBo1986]. According to [Sorv2013], a notional machine:

is an idealized abstraction of computer hardware and other aspects of programs’ runtime environments;

enables the semantics of programs to be described; and

correctly reflects what programs do when executed.

For example, my notional machine for Python is:

Running programs live in memory, which is divided between a call stack and a heap.

Memory for data is always allocated from the heap.

Every piece of data is stored in a two-part structure. The first part says what type the data is, and the second part is the actual value.

Booleans, numbers, and character strings are never modified after they are created.

Lists, sets, and other collections store references to other data rather than storing those values directly. They can be modified after they are created, i.e. a list can be extended or new values can be added to a set.

When code is loaded into memory, Python converts it to a sequence of instructions that are stored like any other data. This is why it’s possible to assign functions to variables and pass them as parameters.

When code is executed, Python steps through the instructions, doing what each one tells it to in turn.

Some instructions make Python read data, do calculations, and create new data. Other instructions control what instructions Python executes, which is how loops and conditionals work. Yet another instruction tells Python to call a function.

When a function is called, Python pushes a new stack frame onto the call stack.

Each stack frame stores variables’ names and references to data. Function parameters are just another kind of variable.

When a variable is used, Python looks for it in the top stack frame. If it isn’t there, it looks in the bottom (global) frame.

When the function finishes, Python erases its stack frame and jumps back to the instructions it was executing before the function call. If there isn’t a “before,” the program has finished.

I use this cartoon version of reality whenever I teach Python. After about 25 hours of instruction and 100 hours of work on their own time, I expect most learners to have a mental model that includes most or all of these features.
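Several parts of this notional machine can be observed directly in a short Python program. The following sketch uses made-up names (`greeting`, `double`, `scale`, and so on) and only illustrates the cartoon model described above, not how any particular Python implementation works internally.

```python
# Every piece of data carries its type along with its value.
answer = 42
kind_of_answer = type(answer).__name__    # "int"

# Strings are never modified after creation: upper() builds a new string.
greeting = "hello"
shouted = greeting.upper()                # greeting is still "hello"

# Lists store references, so two names can refer to the same list.
original = [1, 2]
alias = original
alias.append(3)                           # original is now [1, 2, 3] as well


# Functions are stored like other data, so they can be assigned and passed.
def double(x):
    return 2 * x


def apply(func, value):
    return func(value)


transform = double
result = apply(transform, 21)             # 42

# Variable lookup: the current call's frame first, then the global frame.
scale = 10


def scaled(value):
    return value * scale                  # `value` is local, `scale` is global


def shadowed():
    scale = 2                             # a new variable in this call's frame
    return scale


lookups = (scaled(3), shadowed(), scale)  # (30, 2, 10)
```

The last line is the one that most often surprises novices: assigning to `scale` inside `shadowed` creates a variable in that call's stack frame and leaves the global `scale` untouched.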

Exercises

Your Mental Models (think-pair-share/15)

What is one mental model you use to understand your work? Write a few sentences describing it and give feedback on a partner’s. Once you have done that, have a few people share their models with the whole group. Does everyone agree on what a mental model is? Is it possible to give a precise definition, or is the concept useful precisely because it is fuzzy?

Symptoms of Being a Novice (whole class/5)

Saying that novices don’t have a mental model of a particular domain is not the same as saying that they don’t have a mental model at all. Novices tend to reason by analogy and guesswork, borrowing bits and pieces of mental models from other domains that seem superficially similar.

People who are doing this often say things that are not even wrong. As a class, discuss what some other symptoms of being a novice are. What does someone do or say that leads you to classify them as a novice in some domain?

Modelling Novice Mental Models (pairs/20)

Create a multiple choice question related to a topic you have taught or intend to teach and explain the diagnostic power of each of its distractors (i.e. what misconception each distractor is meant to identify).

When you are done, trade MCQs with a partner. Is their question ambiguous? Are the misconceptions plausible? Do the distractors actually test for them? Are any likely misconceptions not tested for?

Thinking Things Through (whole class/15)

A good formative assessment requires people to think through a problem. For example, imagine that you have placed a block of ice in a bathtub and then filled the tub to the rim with water. When the ice melts, does the water level go up (so that the tub overflows), go down, or stay the same (Figure 2.1)?

The correct answer is that the level stays the same: the ice displaces its own weight in water, so it exactly fills the “hole” it has made when it melts. Figuring out why helps people build a model of the relationship between weight, volume, and density [Epst2002].
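The reasoning can be checked with a little arithmetic. The sketch below assumes the ice floats freely in fresh water and uses approximate densities that are not given in the text; the function names are hypothetical.

```python
WATER_DENSITY = 1000.0  # kg per cubic meter, approximate (assumed value)
ICE_DENSITY = 917.0     # kg per cubic meter, approximate (assumed value)


def displaced_volume(ice_mass):
    """Volume of water pushed aside by floating ice, in cubic meters.

    A floating object displaces water whose weight equals its own weight.
    """
    return ice_mass / WATER_DENSITY


def meltwater_volume(ice_mass):
    """Volume of water the ice turns into, in cubic meters.

    Melting conserves mass, so the ice becomes the same mass of water.
    """
    return ice_mass / WATER_DENSITY


def fraction_above_surface():
    """Share of the ice's volume that sits above the water line."""
    return 1 - ICE_DENSITY / WATER_DENSITY


def level_change_volume(ice_mass):
    """Extra volume added to the tub when the ice melts."""
    return meltwater_volume(ice_mass) - displaced_volume(ice_mass)
```

The two volume functions use different physical arguments but arrive at the same expression, which is why `level_change_volume` is zero for any mass of ice. The ice's density only determines how much of it pokes above the surface (a little over 8% with these values).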

Describe another formative assessment you have seen or used that required people to think something through and thereby identify flaws in their reasoning. When you are done, explain your example to a partner and give them feedback on theirs.

A Different Progression (individual/15)

The novice-competent-expert model of skill development is sometimes called the Dreyfus model. Another commonly-used progression is the four stages of competence:

- Unconscious incompetence:

the person doesn’t know what they don’t know.

- Conscious incompetence:

the person realizes that they don’t know something.

- Conscious competence:

the person has learned how to do something, but can only do it while concentrating and may still need to break things down into steps.

- Unconscious competence:

the skill has become second nature and the person can do it reflexively.

Identify one subject where you are at each level. What level are most of your learners at in the subject you teach most often? What level are you trying to get them to? How do these four stages relate to the novice-competent-expert classification?

What Kind of Computing? (individual/10)

[Tedr2008] summarizes three traditions in computing:

- Mathematical:

Programs are the embodiment of algorithms. They are either correct or incorrect, as well as more or less efficient.

- Scientific:

Programs are more or less accurate models of information processes that can be studied using the scientific method.

- Engineering:

Programs are built objects like dams and airplanes, and are more or less effective and reliable.

Which of these best matches your mental model of computing? If none of them do, what model do you have?

Explaining Why Not (pairs/5)

One of your learners thinks that there is some kind of difference between text that they type in character by character and identical text that they copy and paste. Think of a reason they might believe this or something that might have happened to give them this impression, then pretend to be that learner while your partner explains why this isn’t the case. Trade roles and try again.

Your Model Now (whole class/5)

As a class, create a list of the key elements of your mental model of learning. What are the half-dozen most important concepts and how do they relate?

Your Notional Machines (small groups/20)

Working in small groups, write up a description of the notional machine you want learners to use to understand how their programs run. How does a notional machine for a blocks-based language like Scratch differ from that for Python? What about a notional machine for spreadsheets or for a browser that is interpreting HTML and CSS when rendering a web page?

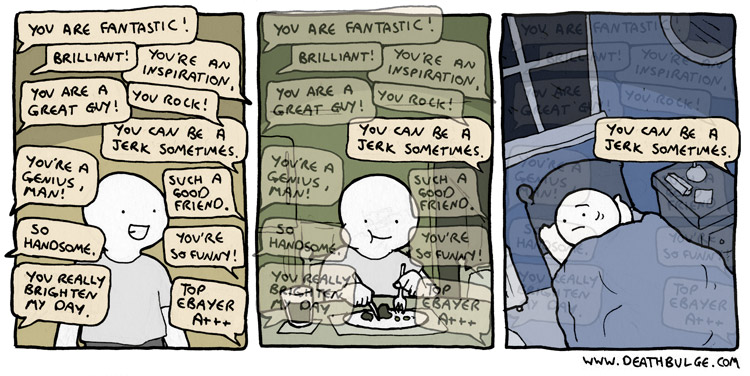

Enjoying Without Learning (individual/5)

Multiple studies have shown that teaching evaluations don’t correlate with learning outcomes [Star2014,Uttl2017], i.e. that how highly learners rate a course doesn’t predict how much they remember. Have you ever enjoyed a class that you didn’t actually learn anything from? If so, what made it enjoyable?

Review

Expertise and Memory

Memory is the residue of thought.

— Daniel Willingham, Why Students Don’t Like School

The previous chapter explained the differences between novices and competent practitioners. This one looks at expertise: what it is, how people acquire it, and how it can be harmful as well as helpful. We then introduce one of the most important limits on learning and look at how drawing pictures of mental models can help us turn knowledge into lessons.

To start, what do we mean when we say someone is an expert? The usual answer is that they can solve problems much faster than people who are “merely competent”, or that they can recognize and deal with cases where the normal rules don’t apply. They also somehow make this look effortless: in many cases, they seem to know the right answer at a glance [Parn2017].

Expertise is more than just knowing more facts: competent practitioners can memorize a lot of trivia without noticeably improving their performance. Instead, imagine for a moment that we store knowledge as a network or graph in which facts are nodes and relationships are arcs1. The key difference between experts and competent practitioners is that experts’ mental models are much more densely connected, i.e. they are more likely to know a connection between any two facts.
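The graph metaphor can be made concrete with a few lines of Python. This is only a sketch of the metaphor, not a model of real memory: the five facts, the links between them, and the helper names `density` and `steps_between` are all invented for illustration.

```python
from collections import deque
from itertools import combinations


def density(facts, links):
    """Fraction of all possible pairs of facts that are directly linked."""
    possible = len(list(combinations(facts, 2)))
    return len(links) / possible if possible else 0.0


def steps_between(links, start, goal):
    """Fewest links to follow from start to goal (breadth-first search)."""
    neighbors = {}
    for a, b in links:
        neighbors.setdefault(a, set()).add(b)
        neighbors.setdefault(b, set()).add(a)
    queue, seen = deque([(start, 0)]), {start}
    while queue:
        node, distance = queue.popleft()
        if node == goal:
            return distance
        for nxt in neighbors.get(node, ()):
            if nxt not in seen:
                seen.add(nxt)
                queue.append((nxt, distance + 1))
    return None  # no route between the two facts


facts = ["A", "B", "C", "D", "E"]
# Hypothetical competent practitioner: facts joined in a single chain.
competent = [("A", "B"), ("B", "C"), ("C", "D"), ("D", "E")]
# Hypothetical expert: the same chain plus extra cross-links.
expert = competent + [("A", "E"), ("A", "C"), ("B", "D"), ("C", "E")]

print(density(facts, competent), steps_between(competent, "A", "E"))  # 0.4 4
print(density(facts, expert), steps_between(expert, "A", "E"))        # 0.8 1
```

Both graphs contain the same facts; only the number of connections differs, and with it the number of steps needed to get from one fact to another.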

The graph metaphor explains why helping learners make connections is as important as introducing them to facts: without those connections, people can’t recall and use what they know. It also explains many observed aspects of expert behavior:

Experts can often jump directly from a problem to a solution because there actually is a direct link between the two in their mind. Where a competent practitioner would have to reason A → B → C → D → E, an expert can go from A to E in a single step. We call this intuition: instead of reasoning their way to a solution, the expert recognizes a solution in the same way that they would recognize a familiar face.

Densely-connected graphs are also the basis for experts’ fluid representations, i.e. their ability to switch back and forth between different views of a problem [Petr2016]. For example, when trying to solve a problem in mathematics, an expert might switch between tackling it geometrically and representing it as a set of equations.

This metaphor also explains why experts are better at diagnosis than competent practitioners: having more linkages between facts makes it easier to reason backward from symptoms to causes. (This in turn is why asking programmers to debug during job interviews gives a more accurate impression of their ability than asking them to program.)

Finally, experts are often so familiar with their subject that they can no longer imagine what it’s like to not see the world that way. This means they are often less able to teach the subject than people with less expertise who still remember learning it themselves.

The last of these points is called expert blind spot. As originally defined in [Nath2003], it is the tendency of experts to organize explanations according to the subject’s deep principles rather than being guided by what their learners already know. It can be overcome with training, but it is part of the reason there is no correlation between how good someone is at doing research in an area and how good they are at teaching it [Mars2002].

The J Word

Experts often betray their blind spot by using the word “just,” as in, “Oh, it’s easy, you just fire up a new virtual machine and then you just install these four patches to Ubuntu and then you just re-write your entire program in a pure functional language.” As we discuss in Chapter 10, doing this signals that the speaker thinks the problem is trivial and that the person struggling with it must therefore be stupid, so don’t do this.

Concept Maps

Our tool of choice for representing someone’s mental model is a concept map, in which facts are bubbles and the connections between them are labeled arcs. As examples, Figure 3.1 shows why the Earth has seasons (from IHMC), and Appendix 22 presents concept maps for libraries from three points of view.

To show how concept maps can be used in teaching programming, consider this for loop in Python:

    for letter in "abc":
        print(letter)

whose output is:

    a
    b
    c

The three key “things” in this loop are shown in the top of Figure 3.2, but they are only half the story. The expanded version in the bottom shows the relationships between those things, which are as important for understanding as the concepts themselves.
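A concept map can also be written down as data: each labeled connection becomes a (concept, label, concept) triple. The sketch below is one possible map for the loop above. The concept names and labels are my own guesses for illustration and are not a transcription of Figure 3.2.

```python
# Hypothetical concept map for the loop: (source, label, target) triples.
concept_map = [
    ("loop variable", "takes each value from", "collection"),
    ("loop body", "runs once for each item in", "collection"),
    ("loop body", "can use", "loop variable"),
]


def concepts(cmap):
    """Return the set of bubbles (nodes) mentioned anywhere in the map."""
    return {name for source, _, target in cmap for name in (source, target)}


def links_from(cmap, concept):
    """Return (label, target) pairs for the arcs leaving one concept."""
    return [(label, target) for source, label, target in cmap if source == concept]


print(sorted(concepts(concept_map)))
for label, target in links_from(concept_map, "loop body"):
    print(f"loop body --{label}--> {target}")
```

Listing only `concepts(concept_map)` gives the "things"; the triples carry the relationships that make up the other half of the story.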

Concept maps can be used in many ways:

- Helping teachers figure out what they’re trying to teach.

A concept map separates content from order: in our experience, people rarely wind up teaching things in the order in which they first drew them.

- Aiding communication between lesson designers.

Teachers with very different ideas of what they’re trying to teach are likely to pull their learners in different directions. Drawing and sharing concept maps can help prevent this. And yes, different people may have different concept maps for the same topic, but concept mapping makes those differences explicit.

- Aiding communication with learners.

While it’s possible to give learners a pre-drawn map at the start of a lesson for them to annotate, it’s better to draw it piece by piece while teaching to reinforce the ties between what’s in the map and what the teacher said. We will return to this idea in Section 4.1.

- For assessment.

Having learners draw pictures of what they think they just learned shows the teacher what they missed and what was miscommunicated. Reviewing learners’ concept maps is too time-consuming to do as a formative assessment during class, but very useful in weekly lectures once learners are familiar with the technique. The qualification is necessary because any new way of doing things initially slows people down—if a learner is trying to make sense of basic programming, asking them to figure out how to draw their thoughts at the same time is an unfair load.

Some teachers are also skeptical of whether novices can effectively map their understanding, since introspection and explanation of understanding are generally more advanced skills than understanding itself. For example, [Kepp2008] looked at the use of concept mapping in computing education. One of their findings was that, “…concept mapping is troublesome for many students because it tests personal understanding rather than knowledge that was merely learned by rote.” As someone who values understanding over rote knowledge, I consider that a benefit.

Start Anywhere

When first asked to draw a concept map, many people will not know where to start. When this happens, write down two words associated with the topic you’re trying to map, then draw a line between them and add a label explaining how those two ideas are related. You can then ask what other things are related in the same way, what parts those things have, or what happens before or after the concepts already on the page in order to discover more nodes and arcs. After that, the hard part is often stopping.

Concept maps are just one way to represent our understanding of a subject [Eppl2006]; others include Venn diagrams, flowcharts, and decision trees [Abel2009]. All of these externalize cognition, i.e. make mental models visible so that they can be compared and combined2.

Rough Work and Honesty

Many user interface designers believe that it’s better to show people rough sketches of their ideas rather than polished mock-ups because people are more likely to give honest feedback on something that they think only took a few minutes to create: if it looks as though what they’re critiquing took hours to create, most will pull their punches. When drawing concept maps to motivate discussion, you should therefore use pencils and scrap paper (or pens and a whiteboard) rather than fancy computer drawing tools.

Seven Plus or Minus Two

While the graph model of knowledge is wrong but useful, another simple model has a sounder physiological basis. As a rough approximation, human memory can be divided into two distinct layers. The first, called long-term or persistent memory, is where we store things like our friends’ names, our home address, and what the clown did at our eighth birthday party that scared us so much. Its capacity is essentially unlimited, but it is slow to access—too slow to help us cope with hungry lions and disgruntled family members.

Evolution has therefore given us a second system called short-term or working memory. It is much faster, but also much smaller: [Mill1956] estimated that the average adult’s working memory could only hold 7±2 items at a time. This is why phone numbers are 7 or 8 digits long: back when phones had dials instead of keypads, that was the longest string of numbers most adults could remember accurately for as long as it took the dial to go around several times.

Participation

The size of working memory is sometimes used to explain why sports teams tend to have about half a dozen members or are broken into sub-groups like the forwards and backs in rugby. It is also used to explain why meetings are only productive up to a certain number of participants: if twenty people try to discuss something, either three meetings are going on at once or half a dozen people are talking while everyone else listens. The argument is that people’s ability to keep track of their peers is constrained by the size of working memory, but so far as I know, the link has never been proven.

7±2 is the single most important number in teaching. A teacher cannot place information directly in a learner’s long-term memory. Instead, whatever they present is first stored in the learner’s short-term memory, and is only transferred to long-term memory after it has been held there and rehearsed (Section 5.1). If the teacher presents too much information too quickly, the new information displaces the old before the latter is transferred.

This is one of the ways to use a concept map when designing a lesson: it helps make sure learners’ short-term memories won’t be overloaded. Once the map is drawn, the teacher chooses a subsection that will fit in short-term memory and lead to a formative assessment (Figure 3.3), then adds another subsection for the next lesson episode and so on.
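As a rough sketch of that selection step, the code below counts the concepts and links in a chosen part of a map and compares the total with a limit. The tiny photosynthesis map is made up for illustration and is not the one in Figure 3.3, and both the limit of seven and the decision to count links as well as concepts are assumptions rather than established rules.

```python
# Hypothetical concept map: (source, label, target) triples.
photosynthesis = [
    ("plants", "absorb", "sunlight"),
    ("plants", "take in", "carbon dioxide"),
    ("plants", "take in", "water"),
    ("plants", "perform", "photosynthesis"),
    ("photosynthesis", "produces", "glucose"),
    ("photosynthesis", "produces", "oxygen"),
]


def chunk_size(cmap, chosen):
    """Count the chosen concepts plus the links that join two of them."""
    chosen = set(chosen)
    links = [t for t in cmap if t[0] in chosen and t[2] in chosen]
    return len(chosen) + len(links)


def fits(cmap, chosen, limit=7):
    """True if the chosen subsection stays within the assumed limit."""
    return chunk_size(cmap, chosen) <= limit


first_episode = ["plants", "sunlight", "photosynthesis"]
everything = {name for s, _, t in photosynthesis for name in (s, t)}

print(chunk_size(photosynthesis, first_episode), fits(photosynthesis, first_episode))  # 5 True
print(chunk_size(photosynthesis, everything), fits(photosynthesis, everything))        # 13 False
```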

Building Concept Maps Together

The next time you have a team meeting, give everyone a sheet of paper and have them spend a few minutes drawing their own concept map of the project you’re all working on. On the count of three, have everyone reveal their concept maps to their group. The discussion that follows may help people understand why they’ve been tripping over each other.

Note that the simple model of memory presented here has largely been replaced by a more sophisticated one in which short-term memory is broken down into several modal stores (e.g. for visual vs. linguistic memory), each of which does some involuntary preprocessing [Mill2016a]. Our presentation is therefore an example of a mental model that aids learning and everyday work.

Pattern Recognition

Recent research suggests that the actual size of short-term memory might be as low as 4±1 items [Dida2016]. In order to handle larger sets of information, our minds create chunks. For example, most of us remember words as single items rather than as sequences of letters. Similarly, the pattern made by five spots on cards or dice is remembered as a whole rather than as five separate pieces of information.
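Chunking is easy to illustrate with a string of digits. In this sketch the ten-digit number is made up, and the 3-3-4 grouping is just one familiar way to split it; the helper `group` is hypothetical.

```python
def group(text, sizes):
    """Split text into consecutive pieces with the given lengths."""
    pieces, start = [], 0
    for size in sizes:
        pieces.append(text[start:start + size])
        start += size
    return pieces


digits = "4165550123"              # invented number, for illustration only
chunks = group(digits, [3, 3, 4])

print(len(digits), "separate digits")   # 10 separate digits
print(len(chunks), "chunks:", chunks)   # 3 chunks: ['416', '555', '0123']
```

Ten items would overflow a short-term memory of 4±1 items, but three chunks fit comfortably.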

Experts have more and larger chunks than non-experts, i.e. experts “see” larger patterns and have more patterns to match things against. This allows them to reason at a higher level and to search for information more quickly and more accurately. However, chunking can also mislead us if we mis-identify things: newcomers really can sometimes see things that experts have looked at and missed.

Given how important chunking is to thinking, it is tempting to identify design patterns and teach them directly. These patterns help competent practitioners think and talk to each other in many domains (including teaching [Berg2012]), but pattern catalogs are too dry and too abstract for novices to make sense of on their own. That said, giving names to a small number of patterns does seem to help with teaching, primarily by giving the learners a richer vocabulary to think and communicate with [Kuit2004,Byck2005,Saja2006]. We will return to this in Section 7.5.

Becoming an Expert

So how does someone become an expert? The idea that ten thousand hours of practice will do it is widely quoted but probably not true: doing the same thing over and over again is much more likely to solidify bad habits than improve performance. What actually works is doing similar but subtly different things, paying attention to what works and what doesn’t, and then changing behavior in response to that feedback to get cumulatively better. This is called deliberate or reflective practice, and a common progression is for people to go through three stages:

- Act on feedback from others.

A learner might write an essay about what they did on their summer holiday and get feedback from a teacher telling them how to improve it.

- Give feedback on others’ work.

The learner might critique character development in a Harry Potter novel and get feedback from the teacher on their critique.

- Give feedback to themselves.

At some point, the learner starts critiquing their own work as they do it using the skills they have now built up. Doing this is so much faster than waiting for feedback from others that proficiency suddenly starts to take off.

What Counts as Deliberate Practice?

[Macn2014] found that, “…deliberate practice explained 26% of the variance in performance for games, 21% for music, 18% for sports, 4% for education, and less than 1% for professions.” However, [Eric2016] critiqued this finding by saying, “Summing up every hour of any type of practice during an individual’s career implies that the impact of all types of practice activity on performance is equal—an assumption that…is inconsistent with the evidence.” To be effective, deliberate practice requires both a clear performance goal and immediate informative feedback, both of which are things teachers should strive for anyway.

Exercises

Concept Mapping (pairs/30)

Draw a concept map for something you would teach in five minutes. Trade with a partner and critique each other’s maps. Do they present concepts or surface detail? Which of the relationships in your partner’s map do you consider concepts and vice versa?

Concept Mapping (Again) (small groups/20)

Working in groups of 3–4, have each person independently draw a concept map showing their mental model of what goes on in a classroom. When everyone is done, compare the concept maps. Where do your mental models agree and disagree?

Enhancing Short-Term Memory (individual/5)

[Cher2007] suggests that the main reason people draw diagrams when they are discussing things is to enlarge their short-term memory: pointing at a wiggly bubble drawn a few minutes ago triggers recall of several minutes of debate. When you exchanged concept maps in the previous exercise, how easy was it for other people to understand what your map meant? How easy would it be for you if you set it aside for a day or two and then looked at it again?

That’s a Bit Self-Referential, Isn’t It? (whole class/30)

Working independently, draw a concept map for concept maps. Compare your concept map with those drawn by other people. What did most people include? What were the significant differences?

Noticing Your Blind Spot (small groups/10)

Elizabeth Wickes listed all the things you need to understand in order to read this one line of Python:

    answers = ['tuatara', 'tuataras', 'bus', "lick"]

The square brackets surrounding the content mean we’re working with a list (as opposed to square brackets immediately to the right of something, which is a data extraction notation).

The elements are separated by commas outside and between the quotes (rather than inside, as they would be for quoted speech).

Each element is a character string, and we know that because of the quotes. We could have number or other data types in here if we wanted; we need quotes because we’re working with strings.

We’re mixing our use of single and double quotes; Python doesn’t care so long as they balance around the individual strings.

Each comma is followed by a space, which is not required by Python, but which we prefer for readability.

Each of these details might be overlooked by an expert. Working in groups of 3–4, select something equally short from a lesson you have recently taught or learned and break it down to this level of detail.
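Several of the details listed above can be checked directly in Python. This is a small sketch; the extra variable names are invented for illustration.

```python
answers = ['tuatara', 'tuataras', 'bus', "lick"]

# Square brackets around values build a list...
assert type(answers) is list
# ...while square brackets to the right of a name extract an element.
first = answers[0]

# Single and double quotes produce the same kind of string.
assert 'lick' == "lick"

# Spaces after the commas are optional; the list is the same without them.
assert answers == ['tuatara','tuataras','bus',"lick"]

# A comma inside the quotes is part of the string, not a separator.
one_item = ['tuatara, tuataras']
assert len(one_item) == 1

# Elements do not have to be strings.
mixed = ['tuatara', 2, 3.5]

print(first, len(answers), len(one_item), len(mixed))  # tuatara 4 1 3
```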

What to Teach Next (individual/5)

Refer back to the concept map for photosynthesis in Figure 3.3. How many concepts and links are in the selected chunks? What would you include in the next chunk of the lesson and why?

The Power of Chunking (individual/5)

Look at Figure 3.4 for 10 seconds, then look away and try to write out your phone number with these symbols3. (Use a space for ’0’.) When you are finished, look at the alternative representation in Appendix 23. How much easier are the symbols to remember when the pattern is made explicit?

Cognitive Architecture

We have been talking about mental models as if they were real things, but what actually goes on in a learner’s brain when they’re learning? The short answer is that we don’t know; the longer answer is that we know a lot more than we used to. This chapter will dig a little deeper into what brains do while they’re learning and how we can leverage that to design and deliver lessons more effectively.

What’s Going On In There?

Figure 4.1 is a simplified model of human cognitive architecture. The core of this model is the separation between short-term and long-term memory discussed in Section 3.2. Long-term memory is like your basement: it stores things more or less permanently, but you can’t access its contents directly. Instead, you rely on your short-term memory, which is the cluttered kitchen table of your mind.

When you need something, your brain retrieves it from long-term memory and puts it in short-term memory. Conversely, new information that arrives in short-term memory has to be encoded to be stored in long-term memory. If that information isn’t encoded and stored, it’s not remembered and learning hasn’t taken place.

Information gets into short-term memory primarily through your verbal channel (for speech) and visual channel (for images)4. Most people rely primarily on their visual channel, but when images and words complement each other, the brain does a better job of remembering them both: they are encoded together, so recall of one later on helps trigger recall of the other.

Linguistic and visual input are processed by different parts of the human brain, and linguistic and visual memories are stored separately as well. This means that correlating linguistic and visual streams of information takes cognitive effort: when someone reads something while hearing it spoken aloud, their brain can’t help but check that it’s getting the same information on both channels.

Learning is therefore increased when information is presented simultaneously in two different channels, but is reduced when that information is redundant rather than complementary, a phenomenon called the split-attention effect [Maye2003]. For example, people generally find it harder to learn from a video that has both narration and on-screen captions than from one that has either the narration or the captions but not both, because some of their attention has to be devoted to checking that the narration and the captions agree with each other. Two notable exceptions to this are people who do not yet speak the language well and people with hearing impairments or other special needs, both of whom may find that the value of the redundant information outweighs the extra processing effort.

Piece by Piece

The split-attention effect explains why it’s more effective to draw a diagram piece by piece while teaching than to present the whole thing at once. If parts of the diagram appear at the same time as things are being said, the two will be correlated in the learner’s memory. Pointing at part of the diagram later is then more likely to trigger recall of what was being said when that part was being drawn.

The split-attention effect does not mean that learners shouldn’t try to reconcile multiple incoming streams of information; after all, this is what they have to do in the real world [Atki2000]. Rather, it means that instruction shouldn’t require people to do so while they are first mastering unit skills: using multiple sources of information simultaneously should be treated as a separate learning task.

Not All Graphics are Created Equal

[Sung2012] presents an elegant study that distinguishes seductive graphics (which are highly interesting but not directly relevant to the instructional goal), decorative graphics (which are neutral but not directly relevant to the instructional goal), and instructive graphics (which are directly relevant to the instructional goal). Learners who received any kind of graphic gave material higher satisfaction ratings than those who didn’t get graphics, but only learners who got instructive graphics actually performed better.

Similarly, [Stam2013,Stam2014] found that having more information can actually lower performance. They showed children pictures, pictures and numbers, or just numbers for two tasks. For some, having pictures or pictures and numbers outperformed having numbers only, but for others, having pictures outperformed pictures and numbers, which outperformed just having numbers.

Cognitive Load

In [Kirs2006], Kirschner, Sweller and Clark wrote:

Although unguided or minimally guided instructional approaches are very popular and intuitively appealing…these approaches ignore both the structures that constitute human cognitive architecture and evidence from empirical studies over the past half-century that consistently indicate that minimally guided instruction is less effective and less efficient than instructional approaches that place a strong emphasis on guidance of the student learning process. The advantage of guidance begins to recede only when learners have sufficiently high prior knowledge to provide “internal” guidance.

Beneath the jargon, the authors were claiming that having learners ask their own questions, set their own goals, and find their own path through a subject is less effective than showing them how to do things step by step. The “choose your own adventure” approach is known as inquiry-based learning, and is intuitively appealing: after all, who would argue against having learners use their own initiative to solve real-world problems in realistic ways? However, asking learners to do this in a new domain overloads them by requiring them to master a domain’s factual content and its problem-solving strategies at the same time.

More specifically, cognitive load theory proposed that people have to deal with three things when they’re learning:

- Intrinsic load

is what people have to keep in mind in order to absorb new material.

- Germane load

is the (desirable) mental effort required to link new information to old, which is one of the things that distinguishes learning from memorization.

- Extraneous load

is anything that distracts from learning.

Cognitive load theory holds that people have to divide a fixed amount of working memory between these three things. Our goal as teachers is to maximize the memory available to handle intrinsic load, which means reducing the germane load at each step and eliminating the extraneous load.

Parsons Problems

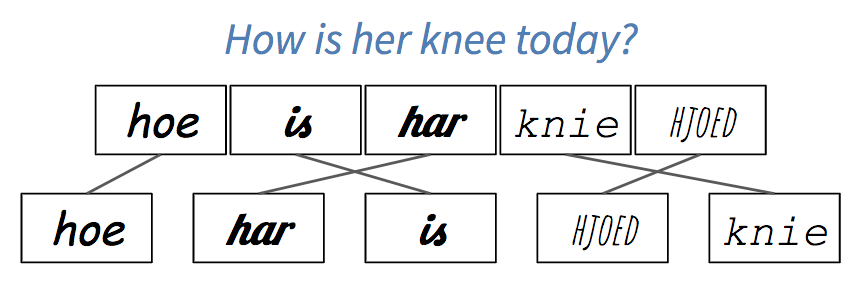

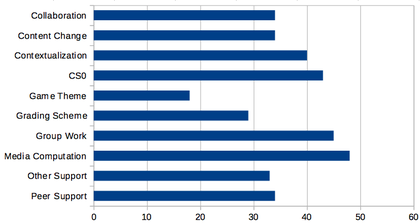

One kind of exercise that can be explained in terms of cognitive load is often used when teaching languages. Suppose you ask someone to translate the sentence, “How is her knee today?” into Frisian. To solve the problem, they need to recall both vocabulary and grammar, which is a double cognitive load. If you ask them to put “hoe,” “har,” “is,” “hjoed,” and “knie” in the right order, on the other hand, you are allowing them to focus solely on learning grammar. If you write these words in five different fonts or colors, though, you have increased the extraneous cognitive load, because they will involuntarily (and possibly unconsciously) expend some effort trying to figure out if the differences are meaningful (Figure 4.2).

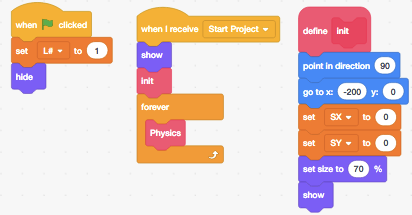

The coding equivalent of this is called a Parsons Problem5 [Pars2006]. When teaching people to program, you can give them the lines of code they need to solve a problem and ask them to put them in the right order. This allows them to concentrate on control flow and data dependencies without being distracted by variable naming or trying to remember what functions to call. Multiple studies have shown that Parsons Problems take less time for learners to do but produce equivalent educational outcomes [Eric2017].
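A Parsons Problem is simple to generate. The sketch below shuffles the lines of a known solution and checks a learner's ordering against it; the function names are hypothetical, and comparing against a single reference ordering is a simplifying assumption, since some programs have more than one valid order.

```python
import random

# Reference solution, one string per line of code.
solution = [
    "total = 0",
    "for word in words:",
    "    total = total + len(word)",
    "print(total)",
]


def make_parsons_problem(lines, seed=None):
    """Return a shuffled copy of the lines for the learner to rearrange."""
    rng = random.Random(seed)
    shuffled = lines[:]
    # Reshuffle until the order differs from the answer (if that is possible).
    while len(set(lines)) > 1 and shuffled == lines:
        rng.shuffle(shuffled)
    return shuffled


def is_correct(attempt, lines):
    """True if the learner's ordering matches the reference solution."""
    return attempt == lines


problem = make_parsons_problem(solution, seed=1)
for line in problem:
    print(line)
```

The learner never has to invent a variable name or recall a function: every piece they need is already on the page.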

Faded Examples

Another type of exercise that can be explained in terms of cognitive load is to give learners a series of faded examples. The first example in a series presents a complete use of a particular problem-solving strategy. The next problem is of the same type, but has some gaps for the learner to fill in. Each successive problem gives the learner less scaffolding, until they are asked to solve a complete problem from scratch. When teaching high school algebra, for example, we might start with this:

| (4x + 8)/2 | = | 5 |

| 4x + 8 | = | 2 * 5 |

| 4x + 8 | = | 10 |

| 4x | = | 10 - 8 |

| 4x | = | 2 |

| x | = | 2 / 4 |

| x | = | 1 / 2 |

and then ask learners to solve this:

| (3x - 1)*3 | = | 12 |

| 3x - 1 | = | _ / _ |

| 3x - 1 | = | 4 |

| 3x | = | _ |

| x | = | _ / 3 |

| x | = | _ |

and this:

| (5x + 1)*3 | = | 4 |

| 5x + 1 | = | _ |

| 5x | = | _ |

| x | = | _ |

and finally this:

| (2x + 8)/4 | = | 1 |

| x | = | _ |
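When preparing a series like this, it can help to check the answer key mechanically before handing the problems out. The sketch below does so with exact fractions; the two helper functions are hypothetical and only cover the two shapes of equation used above.

```python
from fractions import Fraction


def solve_divided(a, b, d, c):
    """Solve (a*x + b)/d = c for x."""
    return Fraction(c * d - b, a)


def solve_multiplied(a, b, m, c):
    """Solve (a*x + b)*m = c for x."""
    return (Fraction(c, m) - b) / a


print(solve_divided(4, 8, 2, 5))       # (4x + 8)/2 = 5   gives 1/2
print(solve_multiplied(3, -1, 3, 12))  # (3x - 1)*3 = 12  gives 5/3
print(solve_multiplied(5, 1, 3, 4))    # (5x + 1)*3 = 4   gives 1/15
print(solve_divided(2, 8, 4, 1))       # (2x + 8)/4 = 1   gives -2
```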

A similar exercise for teaching Python might start by showing learners how to find the total length of a list of words:

    # total_length(["red", "green", "blue"]) => 12
    def total_length(list_of_words):
        total = 0
        for word in list_of_words:
            total = total + len(word)
        return total

and then ask them to fill in the blanks in this (which focuses their attention on control structures):

    # word_lengths(["red", "green", "blue"]) => [3, 5, 4]
    def word_lengths(list_of_words):
        list_of_lengths = []
        for ____ in ____:
            list_of_lengths.append(____)
        return list_of_lengths

The next problem might be this (which focuses their attention on updating the final result):

    # join_all(["red", "green", "blue"]) => "redgreenblue"
    def join_all(list_of_words):
        joined_words = ____
        for ____ in ____:
            ____
        return joined_words

Learners would finally be asked to write an entire function on their own:

    # make_acronym(["red", "green", "blue"]) => "RGB"
    def make_acronym(list_of_words):
        ____

Faded examples work because they introduce the problem-solving strategy piece by piece: at each step, learners have one new problem to tackle, which is less intimidating than a blank screen or a blank sheet of paper (Section 9.11). They also encourage learners to think about the similarities and differences between various approaches, which helps create the linkages in their mental models that help retrieval.

The key to constructing a good faded example is to think about the problem-solving strategy it is meant to teach. For example, the programming problems above all use the accumulator design pattern, in which the results of processing items from a collection are repeatedly added to a single variable in some way to create the final result.
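One way to see that the four programming problems share a strategy is to write the accumulator pattern once and reuse it. This is a sketch for teachers rather than something to show novices; the helper `accumulate` is a hypothetical name, and the lambdas are one possible set of solutions to the faded problems.

```python
def accumulate(items, initial, update):
    """Accumulator pattern: fold each item into a single running result."""
    result = initial
    for item in items:
        result = update(result, item)
    return result


words = ["red", "green", "blue"]

total_length = accumulate(words, 0, lambda total, word: total + len(word))
word_lengths = accumulate(words, [], lambda lengths, word: lengths + [len(word)])
join_all = accumulate(words, "", lambda joined, word: joined + word)
make_acronym = accumulate(words, "", lambda acronym, word: acronym + word[0].upper())

print(total_length, word_lengths, join_all, make_acronym)
# 12 [3, 5, 4] redgreenblue RGB
```

Only the starting value and the update step change from problem to problem, which is exactly what the blanks in the faded examples ask learners to supply.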

Cognitive Apprenticeship

An alternative model of learning and instruction that also uses scaffolding and fading is cognitive apprenticeship, which emphasizes the way in which a master passes on skills and insights to an apprentice. The master provides models of performance and outcomes, then coaches novices by explaining what they are doing and why [Coll1991,Casp2007]. The apprentice reflects on their own problem solving, e.g. by thinking aloud or critiquing their own work, and eventually explores problems of their own choosing.

This model tells us that teachers should present several examples when presenting a new idea so that learners can see what to generalize, and that we should vary the form of the problem to make it clear what are and aren’t superficial features6. Problems should be presented in real-world contexts, and we should encourage self-explanation to help learners organize and make sense of what they have just been taught (Section 5.1).

Labeled Subgoals

Labeling subgoals means giving names to the steps in a step-by-step description of a problem-solving process. [Marg2016,Morr2016] found that learners with labeled subgoals solved Parsons Problems better than learners without, and the same benefit is seen in other domains [Marg2012]. Returning to the Python example used earlier, the subgoals in finding the total length of a list of words or constructing an acronym are:

Create an empty value of the type to be returned.

Get the value to be added to the result from the loop variable.

Update the result with that value.

Labeling subgoals works because grouping related steps into named chunks (Section 3.2) helps learners distinguish what’s generic from what is specific to the problem at hand. It also helps them build a mental model of that kind of problem so that they can solve other problems of that kind, and gives them a natural opportunity for self-explanation (Section 5.1).
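In code, subgoal labels can be as simple as comments. The sketch below applies the three subgoals listed above to both functions mentioned earlier; the exact wording of the comments and the intermediate variable `value` are my own choices.

```python
def total_length(list_of_words):
    # Subgoal 1: create an empty value of the type to be returned.
    total = 0
    for word in list_of_words:
        # Subgoal 2: get the value to be added from the loop variable.
        value = len(word)
        # Subgoal 3: update the result with that value.
        total = total + value
    return total


def make_acronym(list_of_words):
    # Subgoal 1: create an empty value of the type to be returned.
    acronym = ""
    for word in list_of_words:
        # Subgoal 2: get the value to be added from the loop variable.
        value = word[0].upper()
        # Subgoal 3: update the result with that value.
        acronym = acronym + value
    return acronym


print(total_length(["red", "green", "blue"]))  # 12
print(make_acronym(["red", "green", "blue"]))  # RGB
```

The labels are identical in the two functions; only the lines beneath them differ, which separates what is generic from what is specific to each problem.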

Minimal Manuals

The purest application of cognitive load theory may be John Carroll’s minimal manual [Carr1987,Carr2014]. Its starting point is a quote from a user: “I want to do something, not learn how to do everything.” Carroll and colleagues redesigned training to present every idea as a single-page self-contained task: a title describing what the page was about, step-by-step instructions of how to do just one thing (e.g. how to delete a blank line in a text editor), and then several notes on how to recognize and debug common problems. They found that rewriting training materials this way made them shorter overall, and that people using them learned faster. Later studies confirmed that this approach outperformed the traditional approach regardless of prior experience with computers [Lazo1993]. [Carr2014] summarized this work by saying:

Our “minimalist” designs sought to leverage user initiative and prior knowledge, instead of controlling it through warnings and ordered steps. It emphasized that users typically bring much expertise and insight to this learning, for example, knowledge about the task domain, and that such knowledge could be a resource to instructional designers. Minimalism leveraged episodes of error recognition, diagnosis, and recovery, instead of attempting to merely forestall error. It framed troubleshooting and recovery as learning opportunities instead of as aberrations.

Other Models of Learning

Critics of cognitive load theory have sometimes argued that any result can be justified after the fact by labeling things that hurt performance as extraneous load and things that don’t as intrinsic or germane. However, instruction based on cognitive load theory is undeniably effective. For example, [Maso2016] redesigned a database course to remove split attention and redundancy effects and to provide worked examples and sub-goals. The new course reduced the exam failure rate by 34% and increased learner satisfaction.

A decade after the publication of [Kirs2006], a growing number of people believe that cognitive load theory and inquiry-based approaches are compatible if viewed in the right way. [Kaly2015] argues that cognitive load theory is basically micro-management of learning within a broader context that considers things like motivation, while [Kirs2018] extends cognitive load theory to include collaborative aspects of learning. As with [Mark2018] (discussed in Section 5.1), researchers’ perspectives may differ, but the practical implementations of their theories often wind up being the same.

One of the challenges in educational research is that what we mean by “learning” turns out to be complicated once you look beyond the standardized Western classroom. Two specific perspectives from educational psychology have influenced this book. The one we have used so far is cognitivism, which focuses on things like pattern recognition, memory formation, and recall. It is good at answering low-level questions, but generally ignores larger issues like, “What do we mean by ‘learning’?” and, “Who gets to decide?” The other is situated learning, which focuses on bringing people into a community and recognizes that teaching and learning are always rooted in who we are and who we aspire to be. We will discuss it in more detail in Chapter 13.

The Learning Theories website and [Wibu2016] have good summaries of these and other perspectives. Besides cognitivism, those encountered most frequently include behaviorism (which treats education as stimulus/response conditioning), constructivism (which considers learning an active process during which learners construct knowledge for themselves), and connectivism (which holds that knowledge is distributed, that learning is the process of navigating, growing, and pruning connections, and which emphasizes the social aspects of learning made possible by the internet). These perspectives can help us organize our thoughts, but in practice, we always have to try new methods in class, with actual learners, in order to find out how well they balance the many forces in play.

Exercises

Create a Faded Example (pairs/30)

It’s very common for programs to count how many things fall into different categories: for example, how many times different colors appear in an image, or how many times different words appear in a paragraph of text.

Create a short example (no more than 10 lines of code) that shows people how to do this, and then create a second example that solves a similar problem in a similar way but has a couple of blanks for learners to fill in. How did you decide what to fade out? What would the next example in the series be?

Define the audience for your examples. For example, are these beginners who only know some basic programming concepts? Or are these learners with some experience in programming?

Show your example to a partner, but do not tell them what level you think it is for. Once they have filled in the blanks, ask them to guess the intended level.

If there are people among the trainees who don’t program at all, try to place them in different groups and have them play the part of learners for those groups. Alternatively, choose a different problem domain and develop a faded example for it.
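
As a minimal sketch of what such a pair of examples might look like, assuming a Python audience of beginners who already know loops and dictionaries, the block below gives a fully worked word-counting example followed by a faded version of a similar color-counting problem. The function names `count_words` and `count_colors`, the sample text, and the choice of blanks are illustrative assumptions rather than part of the exercise; the faded version is written as comments so that the block still runs.

```python
# Worked example: count how often each word appears in a piece of text.
def count_words(text):
    counts = {}
    for word in text.split():
        # Start each new word at 0, then add 1 for this occurrence.
        counts[word] = counts.get(word, 0) + 1
    return counts


# Faded version of a similar problem (counting colors in a list of pixels).
# Learners replace each ____ with working code:
#
# def count_colors(pixels):
#     counts = ____
#     for color in pixels:
#         counts[color] = ____
#     return counts

print(count_words("the cat saw the other cat"))
```

Here the blanks remove the two lines that carry the counting pattern itself (creating the empty dictionary and updating a count), while the loop scaffolding stays visible. A later example in the series might blank out the loop header as well.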

Classifying Load (small groups/15)

Choose a short lesson that a member of your group has taught or taken recently.

Make a point-form list of the ideas, instructions, and explanations it contains.

Classify each as intrinsic, germane, or extraneous. What did you all agree on? Where did you disagree and why?

(The exercise “Noticing Your Blind Spot” in Section 3.4 will give you an idea of how detailed your point-form list should be.)

Create a Parsons Problem (pairs/20)

Write five or six lines of code that do something useful, jumble them, and ask your partner to put them in order. If you are using an indentation-based language like Python, do not indent any of the lines; if you are using a curly-brace language like Java, do not include any of the curly braces. (If your group includes people who aren’t programmers, use a different problem domain, such as making banana bread.)
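
One possible way to prepare such a problem is sketched below in Python. The five solution lines, the helper names `jumble` and `is_solved`, and the fixed random seed are all assumptions made for illustration; the lines are stored without indentation, as the exercise suggests for indentation-based languages.

```python
import random

# Intended order of the solution, with indentation deliberately stripped.
SOLUTION = [
    "total = 0",
    "values = [3, 1, 4]",
    "for value in values:",
    "total = total + value",
    "print(total)",
]


def jumble(lines, seed=None):
    """Return a shuffled copy of lines that differs from the original order.

    Assumes lines contains at least two distinct entries; otherwise no
    different ordering exists and the loop would never finish.
    """
    rng = random.Random(seed)
    shuffled = list(lines)
    while shuffled == list(lines):
        rng.shuffle(shuffled)
    return shuffled


def is_solved(attempt, solution=SOLUTION):
    """Check whether the learner's ordering matches the intended one."""
    return list(attempt) == list(solution)


puzzle = jumble(SOLUTION, seed=1)
for line in puzzle:
    print(line)
```

This checker accepts only one ordering, even though the first two lines could be swapped without changing what the program does. When writing your own problem, either pick lines with a single valid order or be ready to accept equivalent answers from your partner.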

Minimal Manuals (individual/20)

Write a one-page guide to doing something that your learners might encounter in one of your classes, such as centering text horizontally or printing a number with a certain number of digits after the decimal point. Try to list at least three or four incorrect behaviors or outcomes the learner might see and include a one- or two-line explanation of why each happens and how to correct it.
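
As a rough illustration of the shape such a page could take, here is a sketch for one of the tasks mentioned above (printing a number with a fixed number of digits after the decimal point), assuming learners are using Python 3. The variable names and the three problems chosen are assumptions for the sake of the example; your own learners may run into different ones.

```python
# Task: print a number with exactly two digits after the decimal point.
price = 3.14159
print(f"{price:.2f}")        # 3.14

# Problem: a trailing zero is missing.
# Why: round() returns a number, and numbers do not store trailing zeros.
# Fix: format the number as text instead of rounding it.
print(round(3.10, 2))        # 3.1
print(f"{3.10:.2f}")         # 3.10

# Problem: "ValueError: Unknown format code 'f' for object of type 'str'".
# Why: the value is text (for example, it came from input()), not a number.
# Fix: convert it with float() before formatting.
text = "2.5"
print(f"{float(text):.2f}")  # 2.50

# Problem: the digits after the decimal point have vanished.
# Why: int() cuts off the fractional part; it does not format anything.
# Fix: keep the value as a float and format it.
print(int(2.75))             # 2
print(f"{2.75:.2f}")         # 2.75
```

Each problem follows the same three-part pattern (what the learner sees, why it happens, how to correct it), which keeps the page short and makes it easy to scan when something goes wrong.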

Cognitive Apprenticeship (pairs/15)

Pick a coding problem that you can do in two or three minutes and think aloud as you work through it while your partner asks questions about what you’re doing and why. Do not just explain what you’re doing, but also why you’re doing it, how you know it’s the right thing to do, and what alternatives you’ve considered but discarded. When you are done, swap roles with your partner and repeat the exercise.

Worked Examples (pairs/15)

Seeing worked examples helps people learn to program faster than just writing lots of code [Skud2014], and deconstructing code by tracing it or debugging it also increases learning [Grif2016]. Working in pairs, go through a 10–15 line piece of code and explain what every statement does and why it is necessary. How long does it take? How many things do you feel you need to explain per line of code?

Critiquing Graphics (individual/30)

[Maye2009,Mill2016a] present six principles for good teaching graphics:

- Signalling:

visually highlight the most important points so that they stand out from less-critical material.

- Spatial contiguity:

place captions as close to the graphics as practical to offset the cost of shifting between the two.

- Temporal contiguity:

present spoken narration and graphics as close in time as practical. (Presenting both at once is better than presenting them one after another.)

- Segmenting:

when presenting a long sequence of material or when learners are inexperienced with the subject, break the presentation into short segments and let learners control how quickly they advance from one to the next.

- Pre-training:

if learners don’t know the major concepts and terminology used in your presentation, teach just those concepts and terms beforehand.

- Modality:

people learn better from pictures plus narration than from pictures plus text, unless they are non-native speakers or there are technical words or symbols.

Choose a video of a lesson or talk online that uses slides or other static presentations and rate its graphics as “poor,” “average,” or “good” according to these six criteria.

Review

Individual Learning

Previous chapters have explored what teachers can do to help learners. This chapter looks at what learners can do for themselves by changing their study strategies and getting enough rest.

The most effective strategy is to switch from passive learning to active learning [Hpl2018], which significantly improves performance and reduces failure rates [Free2014]:

| Passive | Active |
| --- | --- |
| Read about something | Do exercises |
| Watch a video | Discuss a topic |
| Attend a lecture | Try to explain it |

Referring back to our simplified model of cognitive architecture (Figure 4.1), active learning is more effective because it keeps new information in short-term memory longer, which increases the odds that it will be encoded successfully and stored in long-term memory. And by using new information as it arrives, learners build or strengthen ties between that information and what they already know, which in turn increases the chances that they will be able to retrieve it later.

The other key to getting more out of learning is metacognition, or thinking about one’s own thinking. Just as good musicians listen to their own playing and good teachers reflect on their teaching (Chapter 8), learners will learn better and faster if they make plans, set goals, and monitor their progress. It’s difficult for learners to master these skills in the abstract—just telling them to make plans doesn’t have any effect—but lessons can be designed to encourage good study practices, and drawing attention to these practices in class helps learners realize that learning is a skill they can improve like any other [McGu2015,Miya2018].

The big prize is transfer of learning, which occurs when one thing we have learned helps us learn other things more quickly. Researchers distinguish between near transfer, which occurs between similar or related areas like fractions and decimals in mathematics, and far transfer, which occurs between dissimilar domains—for example, the idea that learning to play chess will help mathematical reasoning or vice versa.

Near transfer undoubtedly occurs—no kind of learning beyond simple memorization could occur if it didn’t—and teachers leverage it all the time by giving learners exercises that are similar to material that has just been presented in a lesson. However, [Sala2017] analyzed many studies of far transfer and concluded that:

…the results show small to moderate effects. However, the effect sizes are inversely related to the quality of the experimental design… We conclude that far transfer of learning rarely occurs.

When far transfer does occur, it seems to happen only once a subject has been mastered [Gick1987]. In practice, this means that learning to program won’t help you play chess and vice versa.

Six Strategies

Psychologists study learning in a wide variety of ways, but have reached similar conclusions about what actually works [Mark2018]. The Learning Scientists have catalogued six of these strategies and summarized them in a set of downloadable posters. Teaching these strategies to learners, and mentioning them by name when you use them in class, can help them learn how to learn faster and better [Wein2018a,Wein2018b].

Spaced Practice

Ten hours of study spread out over five days is more effective than two five-hour days, and far better than one ten-hour day. You should therefore create a study schedule that spreads study activities over time: block off at least half an hour to study each topic each day rather than trying to cram everything in the night before an exam [Kang2016].

You should also review material after each class, but not immediately after—take at least a half-hour break. When reviewing, be sure to include at least a little bit of older material: for example, spend twenty minutes looking over notes from that day’s class and then five minutes each looking over material from the previous day and from a week before. Doing this also helps you catch any gaps or mistakes in previous sets of notes while there’s still time to correct them or ask questions: it’s painful to realize the night before the exam that you have no idea why you underlined “Demodulate!!” three times.

When reviewing, make notes about things that you had forgotten: for example, make a flash card for each fact that you couldn’t remember or that you remembered incorrectly [Matt2019]. This will help you focus the next round of study on things that most need attention.
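
The review routine described above (twenty minutes on that day's notes, then five minutes each on the previous day's and on notes from a week before) can be written down as a small schedule. The sketch below assumes Python; the function name `review_plan` and the sample date are hypothetical and chosen only for illustration.

```python
from datetime import date, timedelta


def review_plan(today):
    """Return (date of notes, minutes) pairs for one review session."""
    return [
        (today, 20),                     # that day's class
        (today - timedelta(days=1), 5),  # the previous day
        (today - timedelta(days=7), 5),  # a week before
    ]


plan = review_plan(date(2024, 3, 15))
for notes_date, minutes in plan:
    print(notes_date.isoformat(), minutes, "minutes")
print("total:", sum(minutes for _, minutes in plan), "minutes")
```

The three blocks add up to thirty minutes, which matches the suggestion to set aside at least half an hour per topic per day.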

The Value of Lectures

According to [Mill2016a], “The lectures that predominate in face-to-face courses are relatively ineffective ways to teach, but they probably contribute to spacing material over time, because they unfold in a set schedule over time. In contrast, depending on how the courses are set up, online students can sometimes avoid exposure to material altogether until an assignment is nigh.”

Retrieval Practice

The limiting factor for long-term memory is not retention (what is stored) but recall (what can be accessed). Recall of specific information improves with practice, so outcomes in real situations can be improved by taking practice tests or summarizing the details of a topic from memory and then checking what was and wasn’t remembered. For example, [Karp2008] found that repeated testing improved recall of word lists from 35% to 80%.

Recall is better when practice uses activities similar to those used in testing. For example, writing personal journal entries helps with multiple-choice quizzes, but less than doing practice quizzes [Mill2016a]. This phenomenon is called transfer-appropriate processing.

One way to exercise retrieval skills is to solve problems twice. The first time, do it entirely from memory without notes or discussion with peers. After grading your own work against a rubric supplied by the teacher, solve the problem again using whatever resources you want. The difference between the two shows you how well you were able to retrieve and apply knowledge.

Another method (mentioned above) is to create flash cards. Physical cards have a question or other prompt on one side and the answer on the other, and many flash card apps are available for phones. If you are studying as part of a group, swapping flash cards with a partner helps you discover important ideas that you may have missed or misunderstood.
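
For learners who would rather build their own flash cards than install an app, a minimal sketch in Python might look like the following. The function name `quiz`, the sample cards, and the exact-match comparison are all assumptions; real answers usually need a person to judge whether they are close enough.

```python
def quiz(cards, answers):
    """Compare answers given from memory with the cards; return missed prompts."""
    missed = []
    for prompt, correct in cards.items():
        given = answers.get(prompt, "")  # an unanswered card counts as missed
        if given.strip().lower() != correct.strip().lower():
            missed.append(prompt)
    return missed


cards = {
    "near transfer": "between similar areas",
    "far transfer": "between dissimilar domains",
    "metacognition": "thinking about one's own thinking",
}
first_try = {
    "near transfer": "between similar areas",
    "far transfer": "between similar areas",
}
to_review = quiz(cards, first_try)
print(to_review)
```

The list of missed prompts is what matters: it tells you which cards to put at the front of the pile for the next round of study.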